Category: Content Strategy

-

Whitepaper on How to Optimise for AEO and GEO

Download for free! Below are the details of a 2-month experiment I undertook to understand what affects AI answers in ChatGPT, Gemini, Perplexity, Copilot and Google.

-

Selling to the Boss

1. Awareness — How many SDMs are aware of your brand? The first question for any marketing team is simple but fundamental: who actually knows we exist? Awareness is about building mental availability among senior decision-makers (SDMs) — the people who influence budgets, sign contracts, or shape vendor lists. To grow awareness: The goal isn’t…

-

Building a Private Chatbot

I built a system that lets me have a real conversation with everything I’ve ever written: an AI version of myself I call Mini Me. Powered by Pinecone, OpenAI, and ResembleAI. Built from years of strategy notes and presentations. Exploring how voice, brand, and knowledge converge. On this…

-

Spreadsheet for measuring and tracking how well you are doing in AI searches

What I have now heard from a couple of recent clients is the importance of actually tracking progress in AI search. As with any part of marketing, you want to create great messaging material, content and so on, but you also need to know whether that effort is actually working. I use a…

-

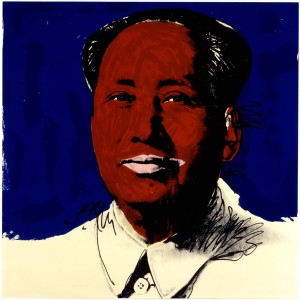

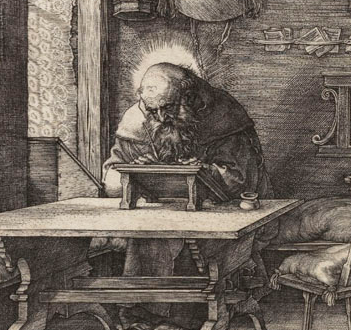

Focus on quality – lessons from Zen and the Art of Motorcycle Maintenance

Introduction What is “quality” content? If you’ve ever read Zen and the Art of Motorcycle Maintenance by Robert Pirsig, you’ll know his reflections on craftsmanship go far beyond fixing bikes. As he wrote: “[In a craftsman], the material and his thoughts are changing together in a progression of changes until his mind’s at rest at…

-

Stage 3 – going beyond keyword search

When building search tools, intelligent assistants, or AI-driven Q&A systems, one of the most foundational decisions you’ll make is how to retrieve relevant content. Most systems historically use keyword-based search—great for basic use cases, but easily confused by natural language or synonyms. That’s where embedding-based retrieval comes in. In this guide, I’ll break down: The…
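To make the contrast concrete, here is a minimal sketch of the two approaches. It is not the implementation from the post: the three-dimensional "embeddings" are hand-assigned toy values, and the names `TOY_EMBEDDINGS`, `keyword_score` and `embedding_score` are hypothetical. A real system would get its vectors from an embedding model and store them in a vector database.

```python
import math

# Toy 3-dimensional "embeddings", hand-assigned for illustration only.
# Assumption: a real system would obtain these vectors from an embedding model.
TOY_EMBEDDINGS = {
    "cheap": [0.9, 0.1, 0.0],
    "affordable": [0.85, 0.15, 0.0],
    "car": [0.0, 0.9, 0.1],
    "automobile": [0.05, 0.88, 0.1],
    "recipe": [0.0, 0.1, 0.9],
    "pasta": [0.05, 0.0, 0.95],
}

DOCS = ["affordable automobile", "pasta recipe"]


def tokens(text):
    """Lowercase the text and split it on whitespace."""
    return text.lower().split()


def keyword_score(query, doc):
    """Count the query words that appear verbatim in the document."""
    return len(set(tokens(query)) & set(tokens(doc)))


def embed(text):
    """Average the toy word vectors; words without a vector are skipped."""
    vecs = [TOY_EMBEDDINGS[t] for t in tokens(text) if t in TOY_EMBEDDINGS]
    if not vecs:
        return [0.0, 0.0, 0.0]
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def embedding_score(query, doc):
    """Score a document by how close its vector is to the query's vector."""
    return cosine(embed(query), embed(doc))


if __name__ == "__main__":
    query = "cheap car"
    for doc in DOCS:
        print(doc, keyword_score(query, doc), round(embedding_score(query, doc), 3))
```

With the query "cheap car", the keyword score for "affordable automobile" is zero because no word matches exactly, while the embedding score ranks it well above "pasta recipe" because the toy vectors for the synonyms sit close together.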

-

Stage 2 – making sense of the chaos

This is the part where all the content sources came together into a centralized system I could actually interact with. This post is a cleaned-up record of what I built, what worked, what didn’t, and what I planned next. If you’ve ever tried to unify fragmented notes, decks, blogs, and structured documents into a searchable…

-

Making decisions in a Bayesian world

Most of your time as a marketing leader is spent trying to make decisions with inadequate data. In an ideal world, we would have run an A/B test on everything we wanted to do, looked at the numbers and then made a decision. Which image should we use for our new advert? What message? What…

-

Everything So Far

All of my PowerPoints so far. Most of what I know about marketing up to this point!

-

Slaying a few marketing myths

We’ve been doing some digital marketing work recently, and the more time I spend on digital work, the more beasts I feel need to be slain. NB: I’m talking specifically about B2B marketing here – which is important. It’s important because many of the problems that B2B marketers face come from taking a…

-

How to add ChatGPT to your own website

There are many stages of exploring ChatGPT: I’ll talk about the first four points here and then, in the next article, the last point. This is a considerably bigger task, so needs a post of its own. The end goal is to allow customers and potential customers to come to your site and ask questions…

-

The Marketing Flywheel

New Year, new marketing plans. Hopefully by now you’ve kicked off various activities and you’re waiting to see how those early campaigns are working out. The other thing I see in marketing departments at this time though is burnout. Everyone is trying to do everything either because there’s no real strategy there (“let’s throw everything…

-

Five Myths About The Marketing Revenue Engine

I love the book Rise of the Revenue Marketer. In it Debbie Qaqish describes the need for a change program to move your marketing department from being a cost centre (“We’re not sure what marketing do, but we need them to do the brochures”), to a revenue centre (“They’re responsible for generating a significant proportion of our company’s…

-

Building a MarTech Stack at a Small Organisation

I recently spoke at the B2B Ignite conference in London on “Building a MarTech Stack at a Small Organisation: A Real World Example of What’s Worked and What Hasn’t”. Here are my slides from that talk. Rules of Thumb It’s a lot of pictures, so might be hard to understand without the actual talk! Any…

-

Measuring Outbound vs. “Always-on” Marketing Performance

Whenever I meet customers I always slip in a marketing question or two along the lines of “Where did you hear about us? What brought you in to Redgate?”. One of the answers from a couple of months back was: Well a year ago, I got a new boss and she told me that I had…

-

There are Three Types of Marketing – Inbound, Outbound and… Plain Rude

Reading one of the many content marketing pieces from HubSpot, I noticed the following in a basic piece on What is Digital Marketing?, after paragraphs about the virtues of Inbound marketing techniques: Digital outbound tactics aim to put a marketing message directly in front of as many people as possible in the online…

-

Write Content That People Actually Want to Read

This feels like a pointless blog post – the thing I’m going to say seems so obvious, I shouldn’t need to say it. Still, I see examples where this doesn’t happen, so perhaps it’s worth reiterating the point. Here’s the incredible insight – if you want people to read content that you write, then it…

-

Dissecting Thought-Leadership

To start, I don’t really like the term “Thought-Leadership”. Like many things in marketing, it’s a bit too “marketing-ey”. It also has echoes of NLP, something I’m not a big fan of, to say the very least. But, I guess it’s pretty descriptive for what it means – I’d define it as something along the…

-

Is it the Content or the Author that Matters for a Blog?

If, like me, you subscribe to a lot of marketing RSS feeds, then you can’t have failed to notice the almost overwhelming proportion of posts about content marketing/SEO and how choosing appropriate and useful content for, say, your blog is a killer way of drawing in early stage leads. This SEOMoz post is a good…