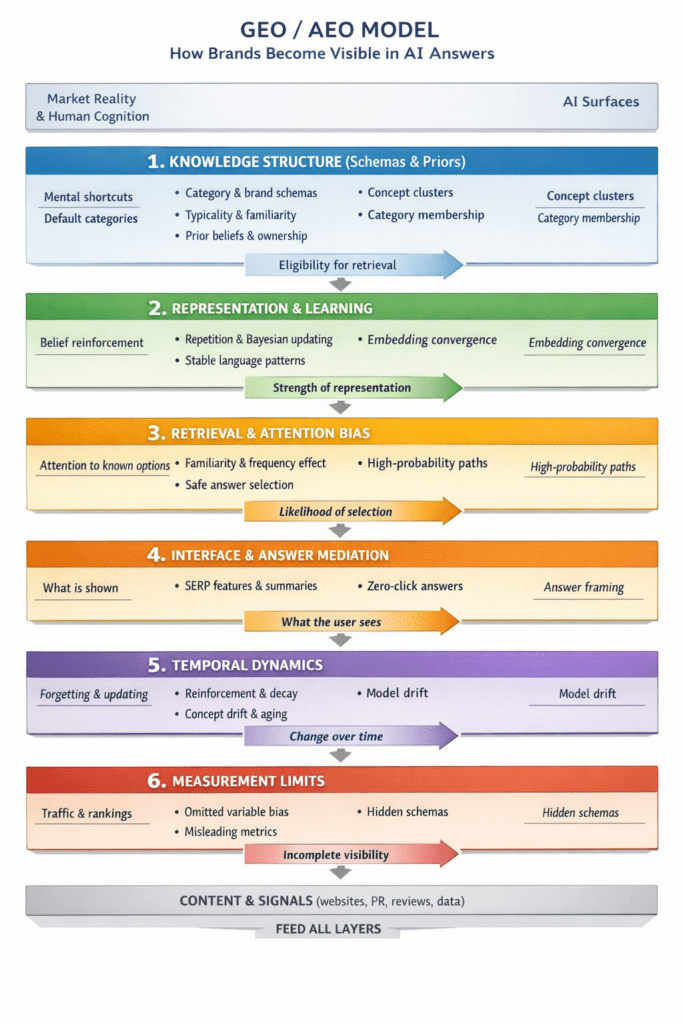

As AI systems increasingly mediate how people discover and assess brands, visibility is no longer a function of ranking alone, which is the model we have all relied on for years. Before a brand can appear in an AI-generated response, it must first be legible to the system. It needs to be recognised as a coherent member of a category, consistently represented across contexts, and safe to select as a default answer.

This perspective draws directly on established work in marketing and cognitive science. Schema-based brand research shows that consumers do not evaluate brands as bundles of isolated attributes, but as organised mental structures with defaults and expectations (see Halkias).

Empirical studies of consumer search further demonstrate that familiarity and prior ownership shape attention long before an explicit query is made (see Ursu et al.). Large language models inherit these same structural biases because they are trained on human language, not abstract truth.

The model below captures this as a six-layer stack. These layers run from knowledge structure and learning at the base, through retrieval bias and interface mediation, to time-based decay and the limits of measurement at the surface.

Most marketing effort concentrates on the surface layers. In practice, AI visibility is largely determined much further down the stack.

This is part of a series whose end goal is to explain why marketing implementation needs a more analytical approach, and why it matters to move away from trying to do everything at once with limited resources. Most importantly, it argues that the decisions you make should be based on sound scientific theory, not gut instinct.

References

Halkias, G. Mental representation of brands: a schema-based approach to consumers’ organization of marketing knowledge. Journal of Product & Brand Management.

Ursu, R. M., Erdem, T., Wang, Q., Zhang, Q. Prior information and consumer search: Evidence from eye-tracking.

Vaswani, A. et al. Attention Is All You Need.

Erdem, T. Decision Making under Uncertainty: Capturing Dynamic Brand Choice Processes.

Erdem, T. Industrial Marketing as a Bayesian Process of Belief Updating.