The Eightfold Path of AI

Step Three – Deep Learning and Representation

Stage 1 and stage 2 of the Eightfold Path showed how computation and data laid the foundations for machine learning. But neither traditional algorithms nor early statistical models could produce the depth of understanding required for general-purpose intelligence. Their representations were too shallow, their flexibility too limited, and their ability to capture complexity constrained by both data and architecture. Stage Three marks the moment when AI systems began to develop richer internal structures – discovering hierarchies, abstractions, and latent features that no human explicitly specified.

Deep learning shifted the field away from hand-engineered features and toward end-to-end learning. Instead of telling a model what to look for, researchers built networks capable of discovering structure directly from data. This represented not just a technical breakthrough but a conceptual one. Intelligence was no longer defined as rules or even patterns. It became the process of learning representations – internal models of the world that allow systems to generalise far beyond their training examples.

During this period, the leap in performance was dramatic. Vision models surpassed hand-crafted image descriptors. Speech recognition improved rapidly. Text models moved from bag-of-words to distributed embeddings. For the first time, AI systems demonstrated the ability to understand hierarchical structure, not just surface-level regularities. Understanding this transition is essential because it set the trajectory for everything that followed: the transformer architecture, multimodal models, and the rise of large-scale foundation systems.

A period of very heavy development dreating the building blocks of modern AI. I took a course at the Cambridge Judge Business School while this innovation was happening

From Features to Representations

Before deep learning, machine learning relied heavily on feature engineering. Humans decided which characteristics mattered:

- edge detectors in vision

- part-of-speech tags for language

- frequency counts in documents

- handcrafted behavioural features for forecasting

Models could only reason with the features they were given. The intelligence therefore lived outside the system – in the mind of the engineer who designed the features.

Deep learning inverted this logic. Instead of providing features, researchers built layered neural networks that could learn them automatically. Each layer extracted a more abstract representation of the input, transforming pixels into edges, edges into shapes, shapes into objects, or transforming word tokens into semantic vectors that captured meaning rather than form.

This was a profound shift. The model began to build its own internal language for describing the world.

Although deep learning dates back several decades, its modern success emerged through breakthroughs in:

- activation functions

- backpropagation scaling

- GPU compute

- larger datasets

- effective regularisation

These improvements allowed networks to reach depths where abstract representations emerged naturally. The important point is conceptual: once models could learn features instead of receiving them, they became capable of capturing complexity far beyond what any engineer could specify by hand.

The Role of Data Scale

Deep learning is inseparable from data. Unlike earlier statistical models, neural networks require scale – both in the number of parameters and in the diversity of examples. As datasets grew through the 2000s and early 2010s, networks learned increasingly rich structures. In language, this meant models could capture nuance rather than rely on simple token frequencies. In vision, models could generalise across poses, lighting conditions, and distortions.

This scaling dynamic is worth highlighting. Traditional machine learning improved marginally as data increased. Deep learning improved dramatically. The relationship was non-linear. More data produced qualitatively better representations, not just refined ones.

During my early encounters with neural networks in the late 1990s, the potential was already visible, but the data was insufficient. Networks were forced into shallow structures and narrow tasks. As larger datasets became available a decade later, the same principles suddenly unlocked capabilities that had long been theoretical.

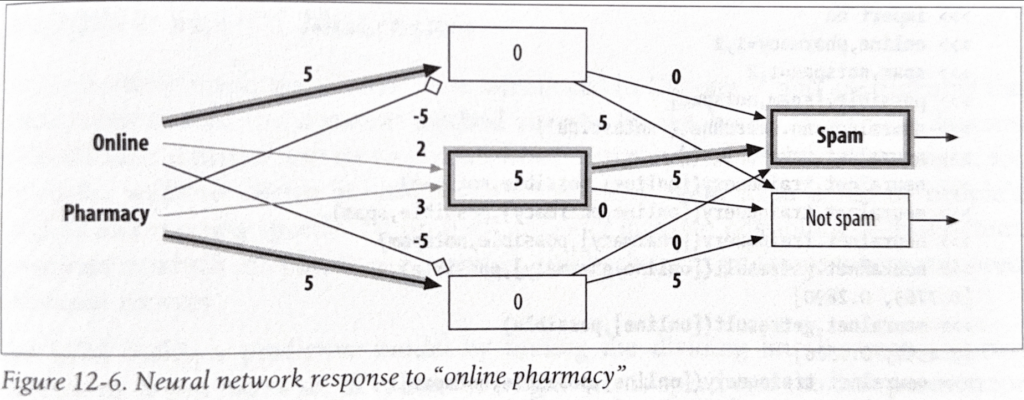

Simple NN structure

Why Deep Learning Behaved Differently

To understand Stage Three, it helps to examine why deep networks outperform classical models.

Hierarchical Abstraction

Neural networks build representations layer by layer. Early layers capture simple structure. Deeper layers encode complex concepts. This mirrors aspects of human perception, where the visual cortex processes edges, shapes, and objects in successive stages.

Smooth, Distributed Representations

Deep learning replaces discrete categories with continuous vector spaces. This allows:

- interpolation

- similarity measurement

- compositional reasoning

A word is no longer a symbolic token; it is a point in a multidimensional space capturing its relationships to other concepts.

End-to-End Learning

Instead of optimising each component separately, deep networks learn the entire mapping from input to output. This makes the system more adaptive and reduces the brittleness seen in manually engineered pipelines.

These behaviours laid the groundwork for modern embeddings – the basis of today’s generative models and retrieval systems.

Applications and Their Significance

Deep learning entered the world through practical tasks long before it reshaped general AI. Key domains included:

- computer vision – object detection, image classification, segmentation

- speech recognition – acoustic modelling, phoneme classification

- language modelling – distributed word embeddings, recurrent neural nets

- recommendation systems – latent factor models

- forecasting and anomaly detection – richer patterns than linear models could capture

These systems demonstrated something new: models could generalise beyond narrow feature sets. They could learn from examples rather than rely on constrained, domain-specific logic.

This shift had significant implications for industry. Companies began to rethink how intelligence was embedded in products. Marketing systems started to incorporate deeper behavioural models, enabling more accurate predictions and more nuanced understanding of customer behaviour. But these were still narrow systems. They lacked the coherence and reasoning capabilities associated with general-purpose models.

Deep learning provided the representational machinery — the ability to encode the world — but not yet the architectural innovation that would unify these representations into something more flexible.

Setting the Stage for the Transformer

Stage Three ends at the moment when deep learning had proven its power but had not yet unified its approaches. Architectures like convolutional neural networks and recurrent networks excelled in specific domains but struggled with long-range dependencies, multimodal inputs, and generality.

The field needed a way to handle sequence information more efficiently, to process variable-length inputs without recurrence, and to leverage parallel computation. That breakthrough arrived in 2017 with the transformer architecture – the defining moment of Stage Four.

Deep learning provided the layers. Transformers provided the structure that allowed those layers to scale.

Summary

- Deep learning replaced feature engineering with learned representations.

- Hierarchical abstraction enabled models to capture complex patterns.

- Scaling data and compute dramatically improved performance.

- Applications matured across vision, speech, and language.

- Stage Four introduces the transformer, which unified and extended these capabilities.