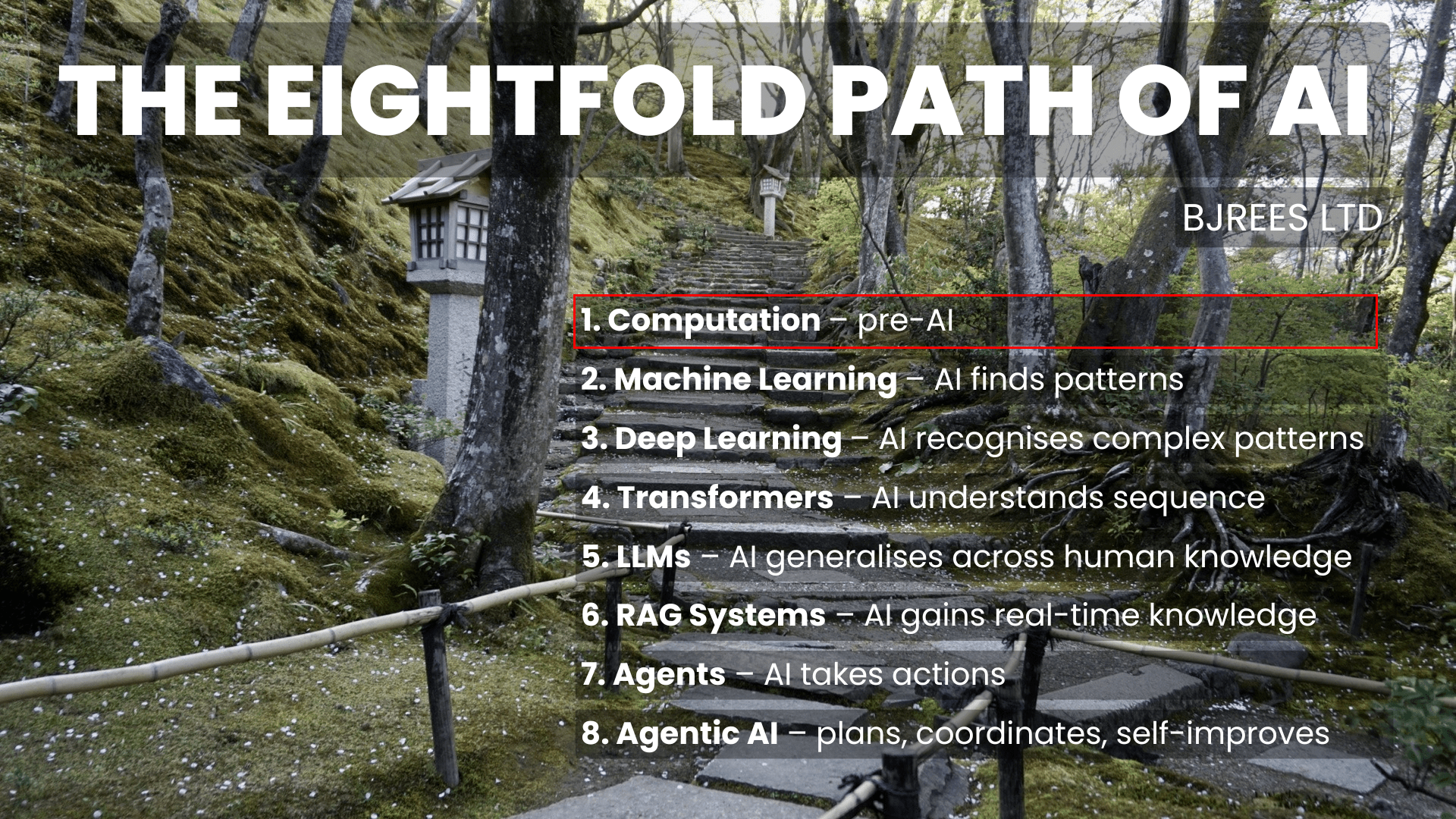

The Eightfold Path of AI

Step One – Pre-AI

Foundation

I’ve spent decades moving through different layers of the technology world – from early programming in the 1990s, to studying neural networks at King’s College London, to applying machine learning in the 2000s, and then returning to AI when the Transformer architecture changed everything in 2017. Along the way, I found myself coming back to the same question: What is the simplest way to understand this field?

AI discussions often jump straight into models, benchmarks, or hype. But underneath all of it is a very clear sequence – a ladder of ideas that build on one another. The Eightfold Path of AI distils that sequence into eight steps. It’s not quite an historical timeline or a technical deep dive. It’s a mental model: a way to understand why AI works, why it changed so suddenly, and where it is heading next.

The Historical Constraint

IBM TPF FTW – for decades, computational power limited any possibility of developing AI systems, though basic multilayer perceptrons could be built even on quite modest hardware.

For decades, limited computation defined what AI could be.

- Early symbolic systems in the 1950s and 60s weren’t failing simply because the ideas were bad; in large part they were failing because computers were too slow.

- Neural networks – the ancestors of today’s models – already existed in conceptual form by the 1980s. They remained largely theoretical because the hardware wasn’t capable of training networks of meaningful scale.

- As recently as the early 2000s, training even a modest machine-learning model could take days or weeks.
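To make “computation” concrete, here is a minimal sketch of the kind of multilayer perceptron that could run even on basic hardware, written in plain Python. It is an illustration under stated assumptions, not a reconstruction of any historical system: `mlp_forward` and `count_macs` are hypothetical helpers, the network uses sigmoid activations, and it counts every multiply-add it performs. That count is the work slow hardware had to grind through for every example, on every pass through the data.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def mlp_forward(
    x: List[float], weights: List[Matrix], biases: List[List[float]]
) -> Tuple[List[float], int]:
    """Forward pass through a fully connected network with sigmoid activations.

    weights[k][j] holds the incoming weights of neuron j in layer k.
    Returns the output activations and the number of multiply-adds performed.
    """
    macs = 0
    activations = x
    for layer_weights, layer_biases in zip(weights, biases):
        next_activations = []
        for row, bias in zip(layer_weights, layer_biases):
            total = bias
            for w, a in zip(row, activations):
                total += w * a  # one multiply-add
                macs += 1
            next_activations.append(1.0 / (1.0 + math.exp(-total)))  # sigmoid
        activations = next_activations
    return activations, macs


def count_macs(layer_sizes: List[int]) -> int:
    """Multiply-adds for one forward pass, given sizes such as [inputs, hidden, outputs]."""
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


if __name__ == "__main__":
    # A tiny 2-2-1 network with all-zero weights: every neuron outputs sigmoid(0) = 0.5.
    out, macs = mlp_forward(
        [1.0, 2.0], [[[0.0, 0.0], [0.0, 0.0]], [[0.0, 0.0]]], [[0.0, 0.0], [0.0]]
    )
    print(out, macs)  # [0.5] 6
    # A hypothetical 784-100-10 network: multiply-adds per example, forward pass only.
    print(count_macs([784, 100, 10]))  # 79400
```

Multiplied over tens of thousands of training examples, many passes through the data, and a backward pass for learning, even this small network adds up to trillions of operations, which helps explain why training runs on the hardware of the time could stretch over hours or days.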

We often imagine that AI breakthroughs came from clever algorithms alone, but in practice it is computational power that makes running any sort of model feasible. Through the 90s and noughties great strides were made, but results were often disappointing and rarely good enough to be used “in production”.

Ideas sat on the shelf, waiting for hardware to catch up.

The Importance of Scaling

Everything changed when computing power reached a threshold where neural networks could finally scale:

- GPUs, originally designed for graphics, allowed thousands of operations to run in parallel.

- Distributed computing let multiple machines train a single model together.

- Later, specialised hardware such as TPUs pushed the boundaries further.
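A back-of-the-envelope calculation shows why parallel and distributed hardware mattered so much. The sketch below uses the widely quoted rule of thumb that training a dense network costs roughly 6 floating-point operations per parameter per training token (about 2 for the forward pass and 4 for the backward pass). The model size, token count, per-device throughput, and 40% utilisation figure are hypothetical placeholders chosen for round numbers, not measurements of any real system, and the code assumes the work splits perfectly across devices.

```python
SECONDS_PER_DAY = 86_400


def training_flops(params: float, tokens: float) -> float:
    """Rule-of-thumb training cost for a dense network: ~6 FLOPs per parameter per token."""
    return 6.0 * params * tokens


def training_days(
    total_flops: float,
    flops_per_device: float,
    devices: int,
    utilisation: float = 0.4,  # assumed fraction of peak throughput actually achieved
) -> float:
    """Wall-clock days if the work is split evenly across `devices` running in parallel."""
    effective_flops_per_second = flops_per_device * devices * utilisation
    return total_flops / effective_flops_per_second / SECONDS_PER_DAY


if __name__ == "__main__":
    # Hypothetical model: 1 billion parameters trained on 20 billion tokens.
    flops = training_flops(1e9, 2e10)  # 1.2e20 FLOPs
    # Hypothetical accelerator with a peak of 1e14 FLOP/s.
    for n in (1, 100, 1000):
        print(f"{n:>5} devices: {training_days(flops, 1e14, n):8.2f} days")
```

Under these assumptions a single device needs about 35 days, while a hundred devices finish in well under one. Real clusters lose some of that speed-up to communication overhead, but the shape of the argument holds: parallel hardware turns impractical training runs into routine ones.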

These gradual changes started to address the core problem: the chasm between “What people hoped for” and “What was actually possible”. Networks trained at this scale started to learn richer patterns, deeper structures, and more abstract relationships. And this is why modern AI feels different – we have crossed a point where scaling hardware produces new behaviour, not just more of the same.

Why Computation Explains Modern AI

Many questions people ask about AI become clearer when viewed through the lens of computation:

Why did models suddenly become so capable around 2017–2020?

Because computation reached a level where the Transformer architecture, which was designed to parallelise well on GPUs, could be trained at scale on the available hardware.

Why do new models keep surprising us?

Because scaling laws show that as you increase compute, a model’s training loss falls along predictable curves, yet the specific behaviours that appear at each new scale were never explicitly programmed.
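The “predictable curves” take the form of a power law: published scaling-law studies fit a model’s loss against training compute with a relation of roughly the form `L(C) = (C0 / C) ** alpha`. In the sketch below, `c0` and `alpha` are illustrative placeholders rather than fitted values. What it demonstrates is structural: under a power law, every tenfold increase in compute cuts loss by the same constant factor, which is what makes the next model’s loss forecastable even when its new behaviours are not.

```python
def power_law_loss(compute: float, c0: float = 1.0, alpha: float = 0.05) -> float:
    """Loss predicted by a power law L(C) = (c0 / C) ** alpha.

    c0 and alpha are illustrative placeholders, not values fitted to any real model.
    """
    if compute <= 0:
        raise ValueError("compute must be positive")
    return (c0 / compute) ** alpha


if __name__ == "__main__":
    previous = None
    for exponent in range(0, 6):
        compute = 10.0 ** exponent
        loss = power_law_loss(compute)
        # Ratio to the previous decade of compute: the same factor every time.
        ratio = "" if previous is None else f"  (x{loss / previous:.3f} vs previous)"
        print(f"compute 1e{exponent}: loss {loss:.3f}{ratio}")
        previous = loss
```

On a log-log plot this curve is a straight line, which is why a few small training runs can be used to extrapolate the loss of a much larger one.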

Why do different models behave differently?

Largely because they were trained with different computational budgets (alongside different data and training choices), affecting depth, nuance, and the richness of their internal representations.

Why does 2025 AI feel so far removed from 2020 AI?

Because compute is compounding exponentially. Each generation has been trained with vastly more operations than the one before.
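The difference between steady and compounding growth is easy to underestimate, so here is a minimal sketch comparing the two. The starting budget and the growth rates (one extra unit per year versus a doubling per year) are hypothetical numbers chosen for clarity, not estimates of actual training compute.

```python
import math


def linear_growth(start: float, step: float, years: int) -> float:
    """Budget after adding a fixed amount each year."""
    return start + step * years


def compound_growth(start: float, factor: float, years: int) -> float:
    """Budget after multiplying by a fixed factor each year."""
    return start * factor ** years


def years_to_multiply(target_multiple: float, factor: float) -> float:
    """Years of compounding at `factor` per year needed to grow by `target_multiple`."""
    return math.log(target_multiple) / math.log(factor)


if __name__ == "__main__":
    for years in (1, 5, 10):
        print(years, linear_growth(1.0, 1.0, years), compound_growth(1.0, 2.0, years))
    # At a hypothetical doubling per year, a thousandfold increase takes about 10 years.
    print(round(years_to_multiply(1000, 2.0), 2))
```

After ten years the linear budget has grown to 11 times its starting value, while the compounding one has grown 1,024 times, which is why the gap between successive model generations can feel so large.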

Seen through this lens, AI stops looking like a sequence of unpredictable jumps and starts looking like a natural consequence of computational scaling.

What This Means in Practical Terms

Starting with computation removes mystery and replaces it with a simple, predictable principle:

As compute grows, capability grows.

And capability grows in ways that are hard to foresee until you reach the next scale.

This principle explains:

- why smaller models feel limited and brittle

- why larger models feel more general, fluent, and robust

- why training time, memory, and hardware choices profoundly shape model behaviour

- why AI progress is no longer linear but exponential

I have personally found that understanding computation in this way has made everything else in the Eightfold Path easier to understand.

My Connection to This Stage (1993–1997)

My own starting point with technology sits firmly in this phase.

Between 1993 and 1997, I was writing code, building small systems, and learning how computers behave at the most fundamental level – deterministic machines driven entirely by computation. Those early experiences shaped the way I still think about AI today: everything begins with what the hardware can do.

How Computation Sets Up the Rest of the Path

Step One is the bedrock. The next stages only make sense once the computational base is clear.

- Step Two looks at data – the second essential ingredient.

- Step Three introduces architecture – how models are structured.

- Step Four covers training – the process that turns computation + data into a learned model.

- Steps Five onwards explore scaling, reasoning, tool use, and ultimately agentic behaviour.

Computation doesn’t tell the whole story, but without it, none of the later stages can be fully understood.

Key Idea

There is growth and change in any market as new players arrive, innovation happens, and we all get closer to customers to find out what they actually want. But in AI there has always been a drag that has held back progress and innovation despite enormous amounts of research. That brake has been computational power (setting aside, for now, the incredible innovations that have let us leap forward faster, primarily Transformers).

Through these eight pages, I hope to show the parallels between the development of AI over the last few decades and my own work in this area. I believe this is the most exciting time to be working in AI, and I am looking forward to what is to come!

Summary

- Early computation established the limits of what AI systems could do.

- Intelligence was initially framed as symbolic logic and rule execution.

- Systems were precise but brittle, lacking adaptability and generality.

- This stage created the infrastructure and mental models for later advances.

- Step Two introduces learning from data rather than hand-crafted rules.