Category: AI / GEO / AEO

-

Daoism and Marketing Planning

A pretentious title for a very short post. But it is relevant. One of the problems that I have hit over and over when managing a marketing department, is the confusion and difficulty with planning. Ignoring cliches like “Failing to plan is planning to fail”, genuinely what is the purpose of planning for say a…

-

Whitepaper on How to Optimise for AEO and GEO

Download for free! Below are the details of 2-month experiment I undertook, to understand what affects AI answers in ChatGPT, Gemini, Perplexity, Copilot and Google.

-

AI Visibility Is a Layered Problem

As AI systems increasingly mediate how people discover and assess brands, visibility is no longer a function of ranking alone (the model we have all been used to for years). Before a brand can appear in an AI-generated response, it must first be legible to the system. It needs to be recognised as a coherent…

-

AEO Visibility Decays Faster Than SEO

…And That Changes How We Should Measure It Generative search engines (Perplexity, Bing AI, ChatGPT Search, Google’s AI Overviews) operate very differently from traditional search. instead of slow moving changes in ranking, they regenerate answers dynamically, pulling from: This dynamic behaviour creates a situation where AEO visibility actually decays unless a brand actively maintains its…

-

Building a Private Chatbot

Project I built a system that lets me have a real conversation with everything I’ve ever written — an AI version of myself I call Mini Me. Powered by Pinecone, OpenAI, and ResembleAI Built from years of strategy notes and presentations Exploring how voice, brand, and knowledge converge Let’s talkSee how it works On this…

-

Spreadsheet for measuring and tracking how well you are doing in AI searches

What I have now heard from a couple of recent clients, is the importance of actually tracking progress in AI search. As with any parts of marketing you want to creating great messaging material, content and so on; but you also need to know whether that effort is actually working or not. I use a…

-

Content tracker for AEO/GEO

As part of my work on being seen by various AI tools I am using a simple spreadsheet to track my progress on different platforms. Just posting it here for now if anybody finds it useful: Image (c) https://www.instagram.com/photo_graph_laugh

-

How your very average laptop can run large language models

One of the biggest blockers I have had is trying to get my head around how you can run some sort of large language model which is good enough for useful tasks, on a regular laptop. Before I looked into the details of how the magic worked, this just didn’t seem possible! But there are…

-

B2B Marketing Measurement*

* Now with added AI Measuring the performance of marketing departments has always been a primary objective for marketing leaders. There have been many models and proposals for how to do this, most of which I’ve used over the years. But now there is an added dimension – how well is your AEO and GEO…

-

Brand is how you impact GEO

Why AI Visibility Lets Us Measure Brand Impact. Finally. For as long as I’ve worked in marketing, there’s been one problem I could never quite solve: how to measure the impact of brand work. We’ve always had solid tools for performance — clicks, conversions, funnel stages. But brand strength? Hand-wavey and unmeasurable. I’ve tried surveys,…

-

Why GEO Matters More Than SEO: Shaping What AI Says About You

Introduction: A New Frontier in Visibility* For more than two decades, digital marketing has revolved around search engines. Brands competed for rankings on Google, invested in SEO playbooks, and built inbound marketing machines. But the terrain has shifted. Increasingly, customers don’t just “search” – they ask AI. Tools like ChatGPT, Perplexity, Bing Copilot, and Google’s…

-

Building an AI assistant to help do the work I love

“If your job isn’t what you love, then something isn’t right” 1 If you are not passionate or at least interested in what your company does for customers then working in marketing is quite a slog. Of course parts of the marketing role which are less interesting than other parts (I’m no fan of doing…

-

Marketing Pyramid v3 – updated marketing model

I’ve updated my marketing pyramid, adding in a new layer for LLMOs – I feel it has got to a point where a marketing strategy that doesn’t reference this new world will start to look a little dated. I’m working on a new version of this pyramid which property understands the impact of LLM developments,…

-

The “Crocodile Effect” – What Falling SEO Clicks Mean in the Age of AI Overviews

Doechii with alligator 🙂

-

-

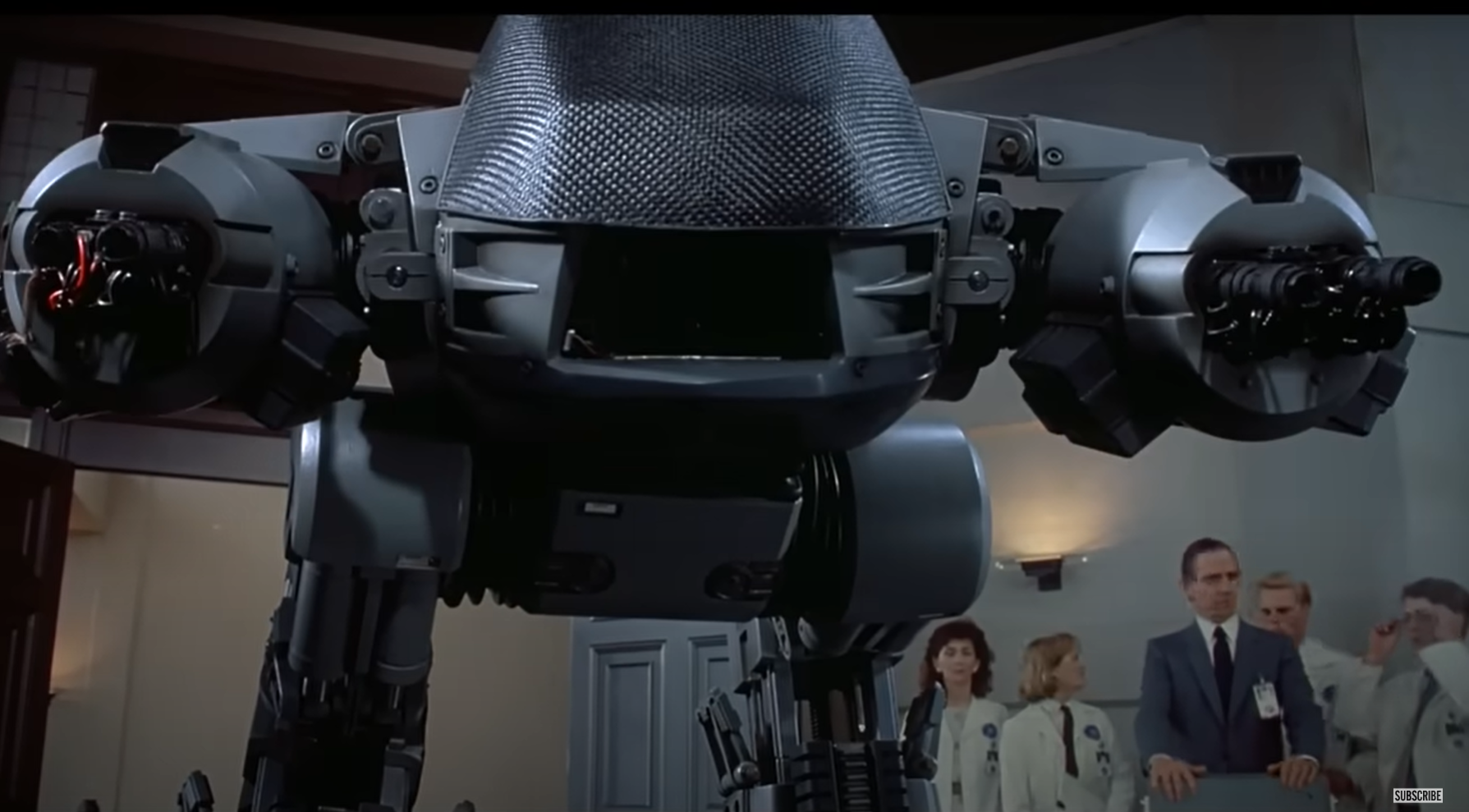

You have 20 seconds to comply

“And whether or not AI might already be, as some scientists believe, sentient, and there’s this little piece on the front of the Daily Telegraph this morning about an AI model that was created by the owner of ChatGPT that apparently disobeyed human instructions and refused to switch itself. Researchers say that this particular model…

-

Stage 4 – Building a Text Interface

So far in this project, I’ve been building a system that can scan all my blog posts, documents, and notes, extract the useful stuff, and make it searchable via natural language. The aim is to get something that works like a real-time assistant — answering questions using my own content as the source. We’re now…

-

Stage 3 – going beyond keyword search

When building search tools, intelligent assistants, or AI-driven Q&A systems, one of the most foundational decisions you’ll make is how to retrieve relevant content. Most systems historically use keyword-based search—great for basic use cases, but easily confused by natural language or synonyms. That’s where embedding-based retrieval comes in. In this guide, I’ll break down: The…

-

Stage 2 – making sense of the chaos

This is the part where all the content sources came together into a centralized system I could actually interact with. This post is a cleaned-up record of what I built, what worked, what didn’t, and what I planned next. If you’ve ever tried to unify fragmented notes, decks, blogs, and structured documents into a searchable…

-

Stage 1 – getting set up: foundations of my AI-powered chatbot project

Over the past few months, I’ve been building something a bit different: a real-time AI-powered assistant designed to help me work better with my own content. The goal is to create a system that can scan and catalog documents, blog posts, audio recordings, and notes, then surface that information back to me as I need…

-

Building a marketing chatbot pt. 3

A short post, and really a video. I have abandoned attempts to control the whole thing by voice as it just seems an enormous amount of work the minimal return. It’s cool, but not practically helpful. Instead and I think more interesting, is going into the world fine-tuning more. If I just wanted general information…

-

-

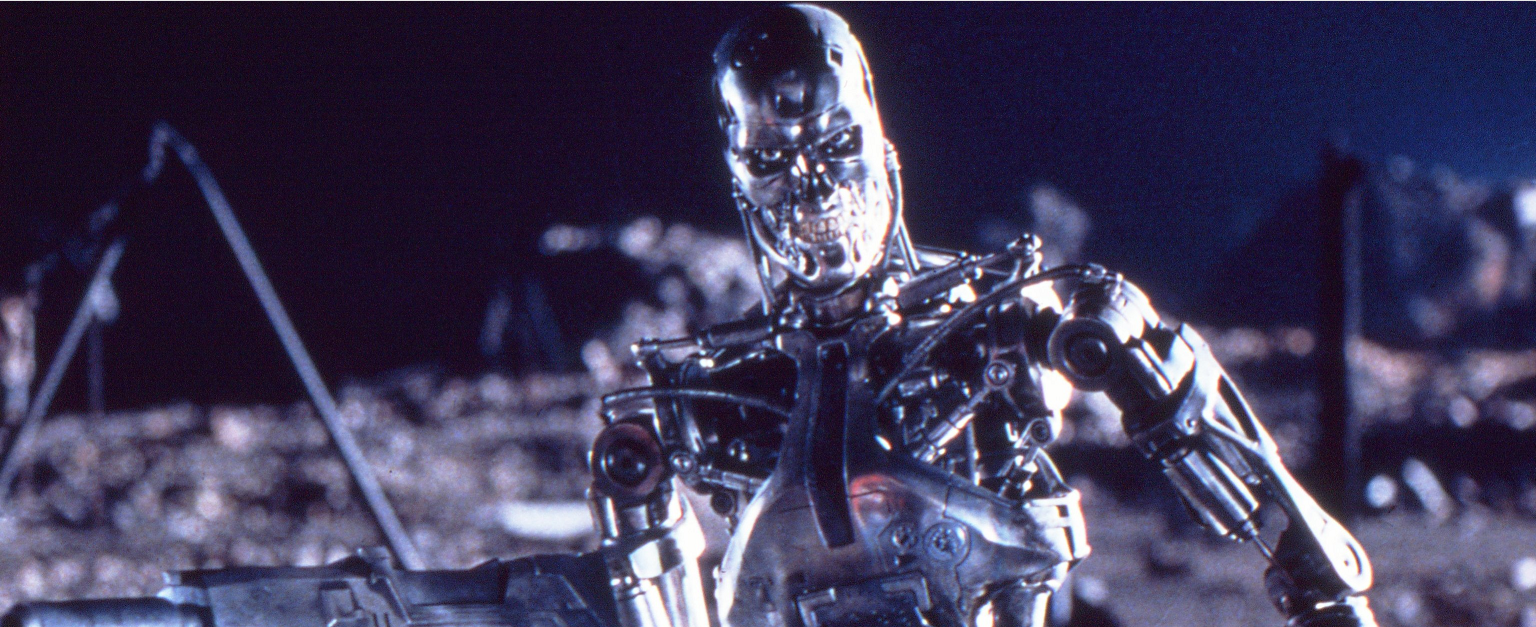

Judgement Day

I’ve started a new project to try and write some Python code so that I can talk to the laptop in real time as I’m working. The ultimate goal is to create a real time assistant who is making suggestions for me in conversations. Today was day one. Despite many many false starts I finally…

-

Security risks of various AI tools

I started doing some manual research into the security risks with different AI tools. But then I thought, why not get the AI to do it for me? So that’s what I did. Once again I am very impressed… 1. Data Privacy and Confidentiality 2. Phishing and Social Engineering 3. Malicious Prompt Injections 4. Data…

-

Making decisions in a Bayesian world

Most of your time as a marketing leader is spent trying to make decisions with inadequate data. In an ideal world, we would have run an A/B test on everything we wanted to do, looked at the numbers and then made a decision. Which image should we use for our new advert? What message? What…

-

Swapping out ChatGPT for Microsoft Copilot

I’ve written a few times before about AI and it’s impact on marketing. I’ve written here about the impact on Google search, and here about openAI and ChatGPT. Finally though Microsoft is making a bit more of a song and dance about their offering, Copilot. I was sceptical at first because a previous version I…

-

Is ChatGPT a threat to Google?

Is ChatGPT a threat Google? Obviously there’s nothing I can write about ChatGPT that hasn’t already been written 400 times, so this post is a sort of “naive plagiarism”. Still, with that in mind… Where do you go first when you want an answer to a problem? This week I wanted to rewrite some old…

-

How to add ChatGPT to your own website

There are many stages of exploring ChatGPT: I’ll talk about the first four points here and then, in the next article, the last point. This is a considerably bigger task, so needs a post of its own. The end goal is to allow customers and potential customers to come to your site and ask questions…

-

Trying out ChatGPT

Of course, really, we all want to build Skynet. However, until Judgement Day comes, we’ll have to make do with ChatGPT. ChatGPT is obviously a Big Deal right now for marketers, so I wanted to find out for myself. Firstly, as a general point I do think it’s important to try technology out yourself before…

-

Sentiment Analysis of Twitter – Part 2 (or, Why Does Everyone Hate Airlines!?)

It took quite a while to write part 2 of this post, for reasons I’ll mention below. But like all good investigations, I’ve ended up somewhere different from where I thought I’d be – after spending weeks looking at the Twitter feeds for different companies in different industries, it seems that the way Twitter is…

-

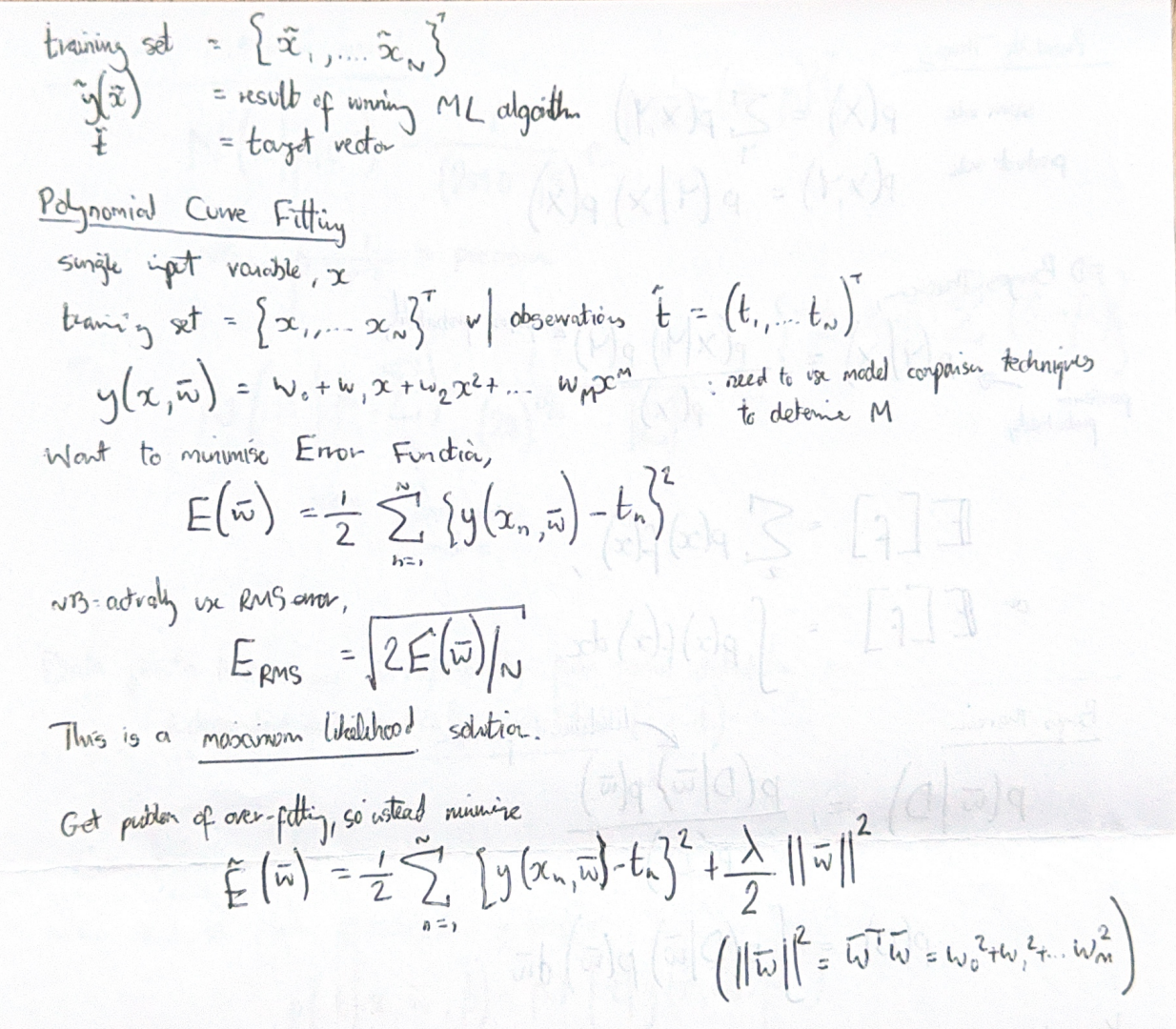

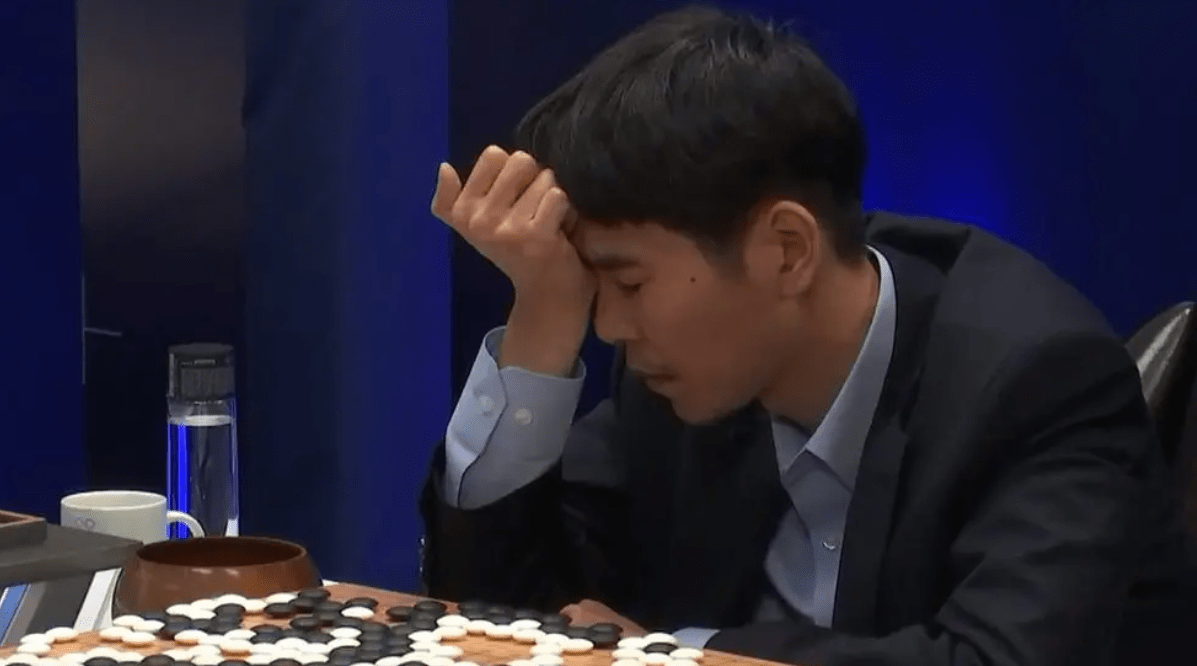

Human Beings are Holding Back Machine Learning

Machine Learning (ML) and AI are big topics right now. Poor Lee Se-dol has just been beaten by AlphaGo – a machine put together by Google/DeepMind and there are numerous other examples in the news.So everyone is interested, and everyone wants to do more of it. Whether you work in marketing or any other discipline, there’s…