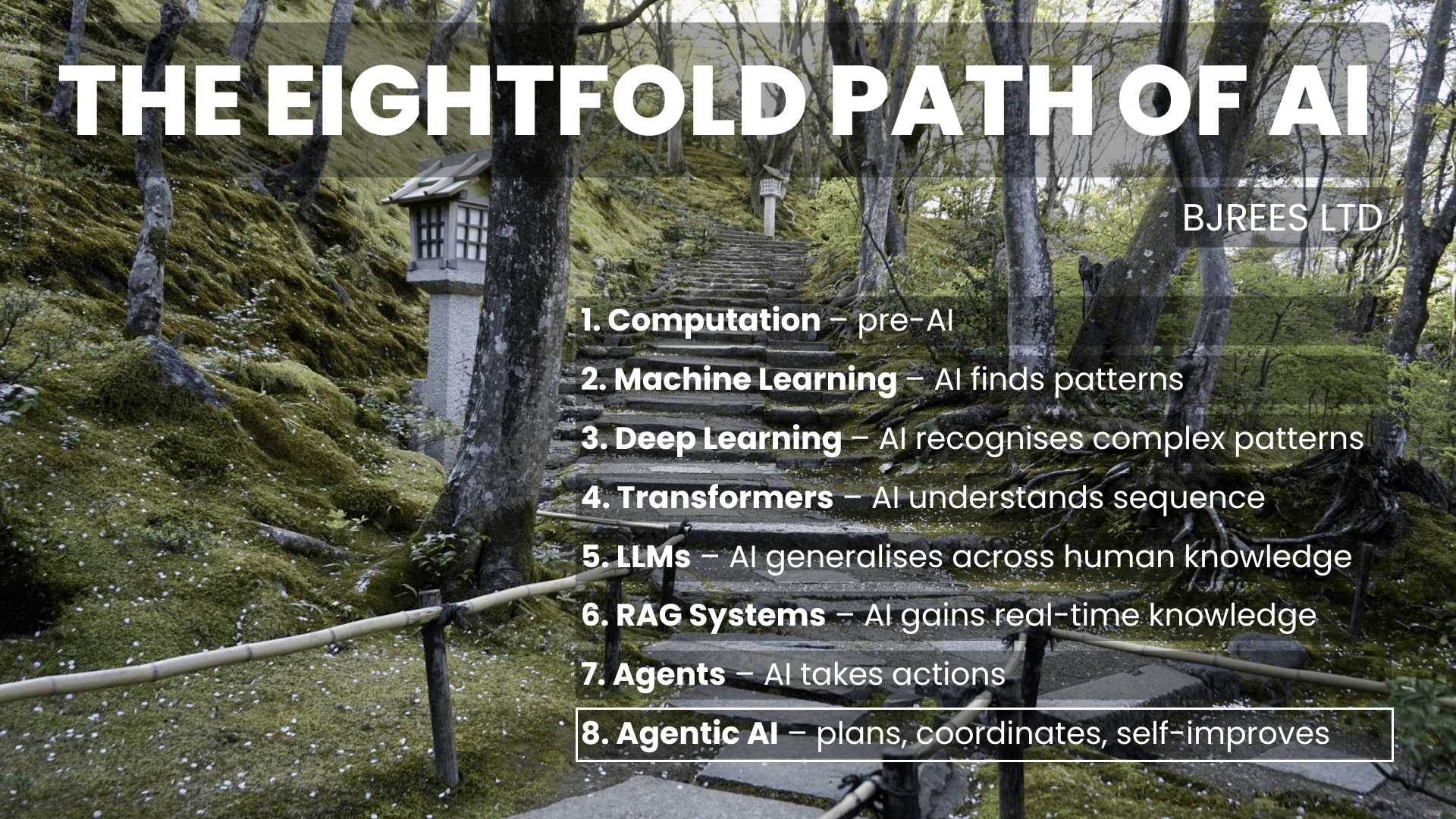

Stage Eight: Agentic AI

When systems stop waiting for prompts and start initiating work (!)

By the end of Stage 7, AI systems can be reliably orchestrated. Tasks are explicit, execution is deliberate, and humans remain in the loop. The system does what it is told, when it is told.

Stage 8 is the next shift. It is the move from orchestration to autonomy.

Agentic AI refers to systems that can pursue a goal with limited supervision. They do not simply respond to prompts. They can plan, act, observe outcomes, and continue operating over time. The defining feature is initiative.

This is not better prompting. It is a change in system behaviour. The AI is no longer a tool you drive step by step. It becomes a system that can decide when work needs to happen and how to progress it.

The boundary after orchestration

Orchestrated systems are powerful, but they still depend on humans to initiate each step. That constraint is often intentional. It limits risk and keeps responsibility clear.

It is also restrictive – as soon as you want systems that can monitor, follow up, or complete longer-running work without constant human input, you cross into Stage 8.

This boundary is pretty simple:

Who initiates the next action – the human, or the system?

When the answer becomes “the system”, you are truly building agents.

From workflows to autonomous loops

The Targaryen fleet heading for Westeros

The capability shift in Stage 8 is behavioural rather than architectural.

Agentic systems can:

- Initiate work without being prompted each step

- Maintain a goal over time and decompose it into sub-tasks

- Observe outcomes and revise plans

- Continue operating until a stopping condition is met

The underlying models may be familiar. What changes is how they are embedded in a persistent loop, with memory and state.

So in a sense this isn’t really AI but the implementation of agency.
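As a rough illustration of what "memory and state" can mean in practice, here is a minimal sketch that saves an agent's state to disk so a loop can resume after a restart. The `AgentState` fields, the function names, and the choice of a JSON file are all assumptions made for this example, not a description of any particular framework.

```python
import json
from dataclasses import dataclass, field, asdict
from pathlib import Path


@dataclass
class AgentState:
    """Hypothetical state an agent carries between iterations."""
    goal: str
    pending: list[str] = field(default_factory=list)  # sub-tasks still to do
    done: list[str] = field(default_factory=list)     # sub-tasks completed
    notes: list[str] = field(default_factory=list)    # observations kept as memory


def save_state(state: AgentState, path: Path) -> None:
    """Write the state to a JSON file so a later run can pick it up."""
    path.write_text(json.dumps(asdict(state), indent=2))


def load_state(path: Path) -> AgentState:
    """Rebuild the state from the JSON file written by save_state."""
    return AgentState(**json.loads(path.read_text()))
```

The point of the sketch is only that the state lives outside the model: the model can be called fresh each time, while the goal, progress, and notes persist between calls.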

What makes a system agentic

Agentic behaviour emerges when a model is wrapped in a control loop:

- Goal

- Plan

- Act

- Observe

- Update

- Repeat

This loop is not handled by the model alone. It requires external systems to manage state, retries, failures, and continuation over time.

As a result, “agent harness” design becomes core engineering work. Memory, context limits, tool permissions, and cost controls matter as much as model quality.
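The loop above can be sketched in a few lines. This is a simplified sketch under stated assumptions: `plan_fn`, `act_fn`, and `is_done` are hypothetical callables standing in for a model call, a tool call, and a stopping check, and the stubs at the bottom replace them with plain functions so the example runs on its own.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LoopState:
    goal: str
    plan: list[str] = field(default_factory=list)
    observations: list[str] = field(default_factory=list)
    steps_taken: int = 0


def run_agent(
    goal: str,
    plan_fn: Callable[[LoopState], list[str]],  # hypothetical: would wrap a model call
    act_fn: Callable[[str], str],               # hypothetical: would wrap a tool call
    is_done: Callable[[LoopState], bool],       # stopping condition
    max_steps: int = 10,                        # hard cap so the loop cannot run forever
) -> LoopState:
    state = LoopState(goal=goal)
    while state.steps_taken < max_steps and not is_done(state):
        state.plan = plan_fn(state)             # Plan
        if not state.plan:
            break                               # nothing left to try
        action = state.plan[0]
        try:
            result = act_fn(action)             # Act
        except Exception as exc:                # failures become observations
            result = f"error: {exc}"
        state.observations.append(result)       # Observe and update
        state.steps_taken += 1                  # Repeat
    return state


# Stub functions so the sketch is runnable without a model or real tools.
todo = ["fetch report", "summarise report"]

def stub_plan(state: LoopState) -> list[str]:
    return todo[state.steps_taken:]

def stub_act(action: str) -> str:
    return f"done: {action}"

def stub_done(state: LoopState) -> bool:
    return state.steps_taken >= len(todo)

final = run_agent("summarise the weekly report", stub_plan, stub_act, stub_done)
print(final.observations)  # ['done: fetch report', 'done: summarise report']
```

Note that the retry handling, the step cap, and the stopping check all sit in the harness rather than in the model, which is the sense in which the loop is "not handled by the model alone".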

Why this stage emerged

Stage 8 emerged from operational pressure: real work is rarely one-shot. It involves iteration, follow-up, and recovery from failure. Once organisations move beyond demonstrations, repeatedly prompting a system becomes inefficient.

They want systems that can continue, monitor, and escalate only when needed.

Autonomy is therefore about throughput, not replacement.

I also found that the volume of work needed to set up these processes without using Agentic AI was very significant. I will be implementing this as needed but, crucially, only when needed. I have always liked the principle that any complicated piece of work should be done manually first, only automating or “Agentify-ing” it once you’ve seen it work through by eye.

Governance and the problem of spend

Stage 8 is where capability and risk converge most sharply.

If a system can decide what to do next, it can also decide to keep going. That has direct economic consequences!

Autonomous agents can:

- consume far more compute than expected

- trigger cascades of tool calls or API usage

- run indefinitely if stopping conditions are unclear

- generate costs without obvious human visibility

This makes governance non-negotiable, particularly if you are running code overnight while you sleep.

In practice, agentic systems require explicit spending controls: budgets, rate limits, scoped permissions, and clear termination rules. Without these, autonomy quickly turns into uncontrolled cost.
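One way to make such controls concrete is a small guard object that every tool or API call must pass through. The sketch below is an assumption-laden example rather than a recommended implementation: `SpendGuard`, `BudgetExceeded`, and the three limits are hypothetical names, costs are whatever unit you choose to track, and rate limiting is left out for brevity.

```python
import time
from dataclasses import dataclass, field


class BudgetExceeded(RuntimeError):
    """Raised when a run would go past one of its limits."""


@dataclass
class SpendGuard:
    max_cost: float      # total spend allowed, in whatever unit you track
    max_calls: int       # hard cap on tool or API calls
    max_seconds: float   # wall-clock limit for the whole run
    spent: float = 0.0
    calls: int = 0
    started: float = field(default_factory=time.monotonic)

    def charge(self, cost: float) -> None:
        """Check every limit BEFORE recording the call; raise if any would be broken."""
        if self.calls + 1 > self.max_calls:
            raise BudgetExceeded("call limit reached")
        if self.spent + cost > self.max_cost:
            raise BudgetExceeded("cost limit reached")
        if time.monotonic() - self.started > self.max_seconds:
            raise BudgetExceeded("time limit reached")
        self.calls += 1
        self.spent += cost


guard = SpendGuard(max_cost=1.0, max_calls=100, max_seconds=3600)
guard.charge(0.4)
guard.charge(0.4)
try:
    guard.charge(0.4)          # would take total spend past 1.0
except BudgetExceeded as exc:
    print(f"stopped: {exc}")   # stopped: cost limit reached
```

Checking before the call, rather than after it, means the agent is stopped before the money is spent, which matters most for unattended runs.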

The question is no longer just “Can the system do this?”, but “Who is accountable for what it spends while doing it?”

Why Stage Eight matters

Stage 8 changes what AI is inside an organisation.

It moves systems:

- from assistance to delegated execution

- from one-off outputs to continuous operation

- from tools you run to systems that run alongside you

This is where AI begins to participate in processes rather than merely supporting them.

Constraints and open tensions

Agentic systems remain constrained by imperfect reasoning, brittle tool interactions, and unclear evaluation criteria. It is often hard to know whether a system is finished, correct, or safe.

As a result, the field is converging on a pragmatic position. Successful systems favour bounded autonomy, simple patterns, and explicit checks over unrestricted agents.

Autonomy works best when it is deliberately limited (I’d suggest for machines and humans alike).
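Bounded autonomy with explicit checks can be as simple as an allowlist in front of the agent's tools. The following is a minimal sketch, assuming hypothetical tool names and a hypothetical `approve` callback that asks a human; real systems would likely need logging and finer-grained scopes.

```python
from typing import Callable

# Hypothetical tool names, chosen only for illustration.
ALLOWED_TOOLS = {"search", "read_file"}   # the agent may call these freely
NEEDS_APPROVAL = {"send_email"}           # a human must confirm each call


def dispatch(
    tool: str,
    arg: str,
    tools: dict[str, Callable[[str], str]],
    approve: Callable[[str, str], bool],
) -> str:
    """Run a tool only if it is permitted; otherwise return a refusal message."""
    if tool not in tools:
        return f"refused: unknown tool {tool}"
    if tool in NEEDS_APPROVAL:
        if not approve(tool, arg):
            return f"refused: approval denied for {tool}"
    elif tool not in ALLOWED_TOOLS:
        return f"refused: {tool} is not permitted"
    return tools[tool](arg)
```

Returning a refusal string instead of raising lets the agent observe the refusal and revise its plan, while the decision about what is permitted stays outside the model.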

What the Eightfold Path has been building toward

Stage 8 is the culmination of the path.

Once you have compute, data, deep learning, transformers, scaled models, retrieval, and orchestration, autonomy becomes possible.

But autonomy is not the finish line. It is the beginning of a new discipline: designing systems that can act independently while remaining aligned with human intent, safety boundaries, and economic constraints.

Closing reflection

The Eightfold Path of AI is not a list of technologies. It is a story about capability emerging from foundations, drawing parallels between how the technology has evolved over the last couple of decades and my own work.

Each stage enabled the next, until systems could finally initiate and persist.

Modern AI is not magic; it is engineering layered on engineering.

I also found a lot of parallels with some early work I did on genetic programming and evolving intelligence from the amazing book “Collective Intelligence”:

“Instead of choosing an algorithm to apply to a problem, you’ll make a program that attempts to automatically build the best program to solve a problem.”

This is ultimately what we are trying to do here – these ideas have been around for decades and I have been following them for a lot of that time.

But the final challenge is not making systems more powerful. It is deciding under what conditions they should be allowed to act – and how much they are allowed to spend while doing so. That is the primary task for you if you are implementing this Eightfold Path – working in tandem with the machines, but also understanding exactly what you are doing at each stage.

Summary

- Stage Eight introduces agentic AI: systems that can initiate actions rather than waiting for explicit prompts.

- Agents operate within goal-directed loops, maintaining objectives, planning steps, and acting over time.

- Control shifts from step-by-step human initiation to system-led continuation within defined boundaries.

- State, memory, and stopping conditions become critical system concerns as execution persists autonomously.

- Governance is essential to manage risk and spend, requiring explicit limits on tools, duration, and cost.