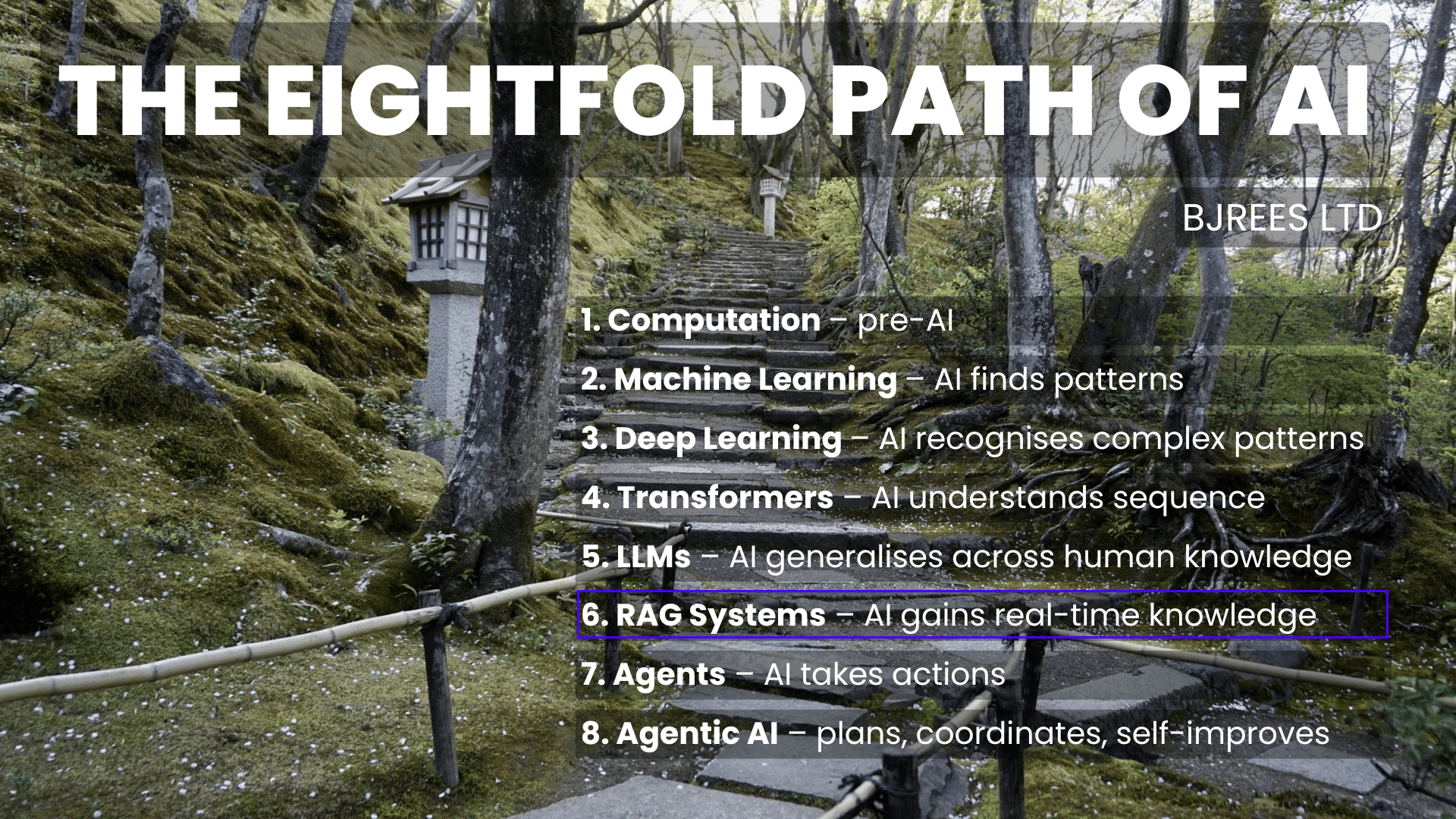

Stage Six – Agentic Systems and the Move Toward Autonomous Capability

By the time foundation models matured, AI systems had become powerful general-purpose learners. They could reason across domains, absorb vast corpora, and adapt to new tasks with minimal examples. Yet they remained fundamentally reactive. They produced coherent output, but only when prompted. They did not initiate actions, plan ahead, or evaluate their own output. On their own, they could not break a goal into smaller actions, interact with external tools, or pursue multi-step objectives.

Stage Six marks the shift toward agentic behaviour – systems capable of orchestrating sequences of actions, integrating memory, interacting with software tools, and managing tasks that unfold over time. Where foundation models provided breadth of understanding, agent systems introduced direction. This evolution reshaped how AI is used in practice, creating systems that behave less like static engines and more like collaborators.

The rise of agentic systems represents not a new architecture, but a new functional layer built on top of foundation models. These agents incorporate planning, tool use, retrieval, execution, and feedback loops. They extend the reach of AI from content generation and reasoning into operational workflows, enabling models to automate complex processes previously dependent on human supervision.

Understanding this stage is essential because it marks the point where AI ceased to be a purely generative technology and began to resemble a system capable of autonomous action.

From Models Towards Agents

In traditional prompting, a model is given an instruction and returns a response. It knows nothing of the broader context or the purpose behind the request beyond what the prompt itself contains. Agentic systems change this by allowing models to:

- interpret goals

- plan multi-step strategies

- call external tools or APIs

- navigate applications or environments

- retrieve past information or documents

- evaluate intermediate results and adjust course

This transformation parallels ideas from cognitive science: humans rely on memory, planning, and tool use to achieve objectives. Early generative models demonstrated knowledge and reasoning, but lacked the mechanisms that turn reasoning into coordinated action.

Agentic systems introduce:

- planning modules

- action selectors

- memory layers

- retrieval augmentation

- feedback evaluation

Instead of a single inference step, the model participates in a sequence – each step informed by the previous one. This multi-step process enables tasks that would be impractical, or impossible, to complete with a single prompt.
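The step-by-step loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular framework implements it: `scripted_policy` is a hypothetical stand-in for a model call (it returns hard-coded decisions so the sketch runs offline), and the two arithmetic tools are toy examples. What matters is the shape of the loop: decide, act, record the observation, and feed the growing history into the next decision.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    """One iteration of the loop: what the agent did and what it observed."""
    action: str
    argument: str
    observation: str


def scripted_policy(goal: str, history: list[Step]) -> tuple[str, str]:
    """Hypothetical stand-in for a model call.

    A real agent would send the goal and history to a language model and
    parse its reply; here the decisions are hard-coded so the loop runs
    without any API.
    """
    if not history:
        return ("add", "2,3")
    if len(history) == 1:
        return ("multiply", f"{history[-1].observation},4")
    return ("finish", history[-1].observation)


def add(args: str) -> str:
    return str(sum(int(x) for x in args.split(",")))


def multiply(args: str) -> str:
    a, b = (int(x) for x in args.split(","))
    return str(a * b)


TOOLS: dict[str, Callable[[str], str]] = {"add": add, "multiply": multiply}


def run_agent(
    goal: str,
    policy: Callable[[str, list[Step]], tuple[str, str]],
    tools: dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> tuple[Optional[str], list[Step]]:
    """Run decide -> act -> observe until the policy finishes or steps run out."""
    history: list[Step] = []
    for _ in range(max_steps):
        action, argument = policy(goal, history)  # each decision sees all prior steps
        if action == "finish":
            return argument, history
        observation = tools[action](argument)
        history.append(Step(action, argument, observation))
    return None, history  # step budget exhausted without an answer


answer, steps = run_agent("compute (2 + 3) * 4", scripted_policy, TOOLS)
print(answer)  # 20
```

The `max_steps` cap is a deliberate design choice: without it, a model that never decides to finish would loop indefinitely.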

Why Agentic Behaviour Emerged

Agentic systems emerged largely because foundation models reached a plateau in single-step reasoning. As tasks grew more complex, researchers observed that even very large models struggled with:

- extended reasoning

- multi-stage problem-solving

- tasks requiring external knowledge

- workflows involving tools or code

- long-context navigation

But when placed in a loop – reasoning, acting, reflecting – their capabilities expanded dramatically. This led to a series of architectural supplements:

1. Tool Use

Models learned to call APIs, search the web, run calculators, query databases, execute code, and interact with applications.
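In practice, tool use usually means the model emits a structured request (a tool name plus arguments) and surrounding code executes it. The sketch below assumes a simple JSON format of the form `{"tool": ..., "arguments": {...}}`; that format, the registry, and both tools (`word_count`, and `lookup_price` with made-up prices) are hypothetical illustrations, not any provider's actual function-calling API. Errors are returned as data rather than raised, so an agent loop can show them to the model and let it correct its call.

```python
import json
from typing import Any, Callable

# Hypothetical registry: tool name -> (function, names of required arguments).
TOOL_REGISTRY: dict[str, tuple[Callable[..., Any], set[str]]] = {}


def register_tool(name: str, required: list[str]):
    """Decorator that records a function as a callable tool."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOL_REGISTRY[name] = (fn, set(required))
        return fn
    return decorator


@register_tool("word_count", required=["text"])
def word_count(text: str) -> int:
    return len(text.split())


@register_tool("lookup_price", required=["product"])
def lookup_price(product: str) -> float:
    prices = {"basic": 10.0, "pro": 25.0}  # made-up demo data, not a real database
    return prices[product]


def dispatch(raw_call: str) -> dict[str, Any]:
    """Parse a model-emitted tool call, validate it, and execute it."""
    try:
        call = json.loads(raw_call)
    except json.JSONDecodeError as exc:
        return {"ok": False, "error": f"invalid JSON: {exc.msg}"}
    if not isinstance(call, dict):
        return {"ok": False, "error": "tool call must be a JSON object"}
    name = call.get("tool")
    args = call.get("arguments", {})
    if not isinstance(name, str) or name not in TOOL_REGISTRY:
        return {"ok": False, "error": f"unknown tool: {name}"}
    if not isinstance(args, dict):
        return {"ok": False, "error": "arguments must be a JSON object"}
    fn, required = TOOL_REGISTRY[name]
    missing = required.difference(args)  # required names absent from the call
    if missing:
        return {"ok": False, "error": f"missing arguments: {sorted(missing)}"}
    try:
        return {"ok": True, "result": fn(**args)}
    except Exception as exc:  # surface tool failures to the agent instead of crashing
        return {"ok": False, "error": f"{type(exc).__name__}: {exc}"}


print(dispatch('{"tool": "lookup_price", "arguments": {"product": "pro"}}'))
# {'ok': True, 'result': 25.0}
```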

2. Retrieval-Augmented Memory

External memory modules allowed models to recall past interactions, documents, and session history.
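A retrieval-augmented memory can be illustrated with a small store that saves past notes and pulls back the most relevant ones before each model call. To keep the sketch dependency-free, relevance here is assumed to be plain word overlap; production systems typically use embedding similarity instead, but the store, retrieve, inject pattern is the same. `MemoryStore` and `build_prompt` are hypothetical names, and the notes are invented examples.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lower-case word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class MemoryStore:
    """Hypothetical session memory: append notes, retrieve the best matches."""

    def __init__(self) -> None:
        self.entries: list[str] = []

    def add(self, text: str) -> None:
        self.entries.append(text)

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        query_terms = tokenize(query)
        scored = []
        for index, entry in enumerate(self.entries):
            overlap = len(query_terms & tokenize(entry))
            if overlap:
                scored.append((overlap, index, entry))
        # Highest overlap first; ties go to the most recent note.
        scored.sort(key=lambda item: (-item[0], -item[1]))
        return [entry for _, _, entry in scored[:k]]


def build_prompt(memory: MemoryStore, question: str, k: int = 2) -> str:
    """Inject retrieved notes into the prompt sent to the model."""
    notes = "\n".join(f"- {note}" for note in memory.retrieve(question, k))
    return f"Relevant notes:\n{notes}\n\nQuestion: {question}"


memory = MemoryStore()
memory.add("The client prefers a formal tone in emails.")
memory.add("The Q3 campaign budget was approved.")
memory.add("The client asked for weekly reporting on the campaign.")
print(build_prompt(memory, "What tone does the client prefer?", k=1))
```

Only the retrieved notes reach the model, which is what lets memory grow beyond what fits in a single prompt.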

3. Planning and Decomposition

Systems learned to break problems into smaller tasks and execute them sequentially.
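Decomposition can be pictured as a plan: a set of subtasks, each listing the subtasks whose results it depends on, executed one at a time in dependency order. In a real agent the model would usually write the plan itself; here `PLAN` and `HANDLERS` are hypothetical, hard-coded examples, and the ordering uses the standard-library `graphlib.TopologicalSorter` (Python 3.9+).

```python
from graphlib import TopologicalSorter
from typing import Any, Callable

# Hypothetical decomposition of "summarise two competitors".
# Each subtask maps to the subtasks whose results it needs.
PLAN: dict[str, list[str]] = {
    "collect_sources": [],
    "extract_claims": ["collect_sources"],
    "compare_pricing": ["collect_sources"],
    "draft_summary": ["extract_claims", "compare_pricing"],
}

# Stand-in handlers; in practice each might be a model call or a tool call.
HANDLERS: dict[str, Callable[[dict[str, Any]], Any]] = {
    "collect_sources": lambda _: ["competitor A", "competitor B"],
    "extract_claims": lambda deps: [f"claims from {s}" for s in deps["collect_sources"]],
    "compare_pricing": lambda deps: f"{len(deps['collect_sources'])} price lists compared",
    "draft_summary": lambda deps: (
        f"{len(deps['extract_claims'])} sets of claims; {deps['compare_pricing']}"
    ),
}


def execute_plan(
    plan: dict[str, list[str]],
    handlers: dict[str, Callable[[dict[str, Any]], Any]],
) -> dict[str, Any]:
    """Run subtasks sequentially so every task sees its dependencies' results."""
    results: dict[str, Any] = {}
    for task in TopologicalSorter(plan).static_order():
        inputs = {dep: results[dep] for dep in plan[task]}
        results[task] = handlers[task](inputs)
    return results


results = execute_plan(PLAN, HANDLERS)
print(results["draft_summary"])  # 2 sets of claims; 2 price lists compared
```

If a generated plan contains a circular dependency, `static_order()` raises `graphlib.CycleError`, which gives the agent a clear signal to re-plan rather than stall.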

4. Environmental Interaction

Agents could act within simulations, operating systems, browsers, or business tools.

These additions transformed models into something more like task-driven problem-solvers.

The Emergence of Practical Agents

Between 2023 and 2025, the field moved from conceptual prototypes to working agent frameworks, with LangChain and LangGraph becoming popular. Agents built during this period included:

- autonomous coding agents

- research assistants capable of multi-source retrieval

- workflow automation systems

- agents for data analysis, scheduling, or operations

- browser-based agents interacting with web interfaces

- business process agents for CRM, marketing, and reporting tasks

In marketing specifically, agentic systems began supporting tasks that required:

- structured data lookup

- competitive analysis

- summarisation of large corpora

- drafting, refining, and distributing content

- handling multi-step workflows such as campaign design

While early agents required careful supervision, their capabilities improved rapidly as foundation models became stronger at planning and self-evaluation.

My Personal Work

This was such an interesting time for the evolution of AI technologies that I started spending more time outside the office, trying to soak up insights from others in Cambridge.

For me, this stage represented a practical inflection point. Earlier models were conceptually fascinating, but they operated as tools: insightful and flexible, yet fundamentally static. Agentic systems, the next step in this progression, behaved differently. They changed how I built prototypes, conducted research, and approached marketing workflows. Instead of generating single outputs, they could execute small pipelines – drafting, reviewing, comparing, retrieving sources, and synthesising results. This shift helped shape the direction of my own assistant projects, where retrieval, memory, and tool use became central.

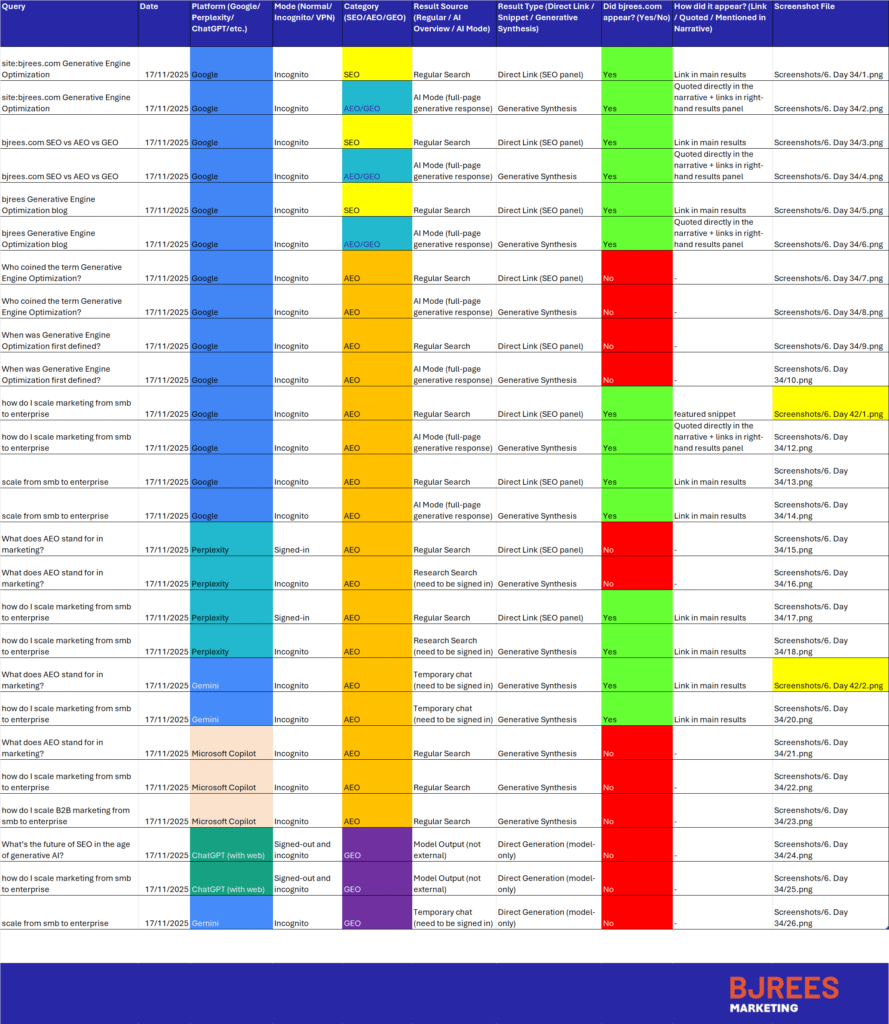

This is also the period when I started investigating the marketing problem of how organisations could become visible to AI models and the assistants built on them, particularly ChatGPT, Gemini, Microsoft Copilot, and Perplexity.

Why Stage Six Matters

The transition to agentic systems matters for three core reasons.

1. From Understanding to Action

Models can now act on knowledge, not merely represent it.

2. From Single-Step to Multi-Step

Agents handle tasks that unfold across time and tools, making them more aligned with real-world workflows.

3. From Static to Adaptive

With memory and feedback loops, agents adjust behaviour dynamically rather than relying solely on prompt structure.

This stage represents a conceptual shift: AI is no longer a passive component in systems, but an active participant.
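The feedback loops mentioned under "From Static to Adaptive" can be reduced to a generate, evaluate, revise cycle with a bounded number of attempts. In this sketch `stub_generator` is a hypothetical stand-in for a model that revises its draft when given feedback, and `evaluate` applies two invented rules (a word limit and a required term); real systems often use a second model call or automated tests as the evaluator.

```python
from typing import Callable, Optional

Feedback = list[str]


def evaluate(draft: str, max_words: int, required: list[str]) -> Feedback:
    """Return a list of problems; an empty list means the draft passes."""
    problems = []
    word_total = len(draft.split())
    if word_total > max_words:
        problems.append(f"too long: {word_total} words > {max_words}")
    for term in required:
        if term.lower() not in draft.lower():
            problems.append(f"missing term: {term}")
    return problems


def refine(
    generate: Callable[[Feedback], str],
    max_words: int,
    required: list[str],
    max_attempts: int = 3,
) -> tuple[Optional[str], list[tuple[str, Feedback]]]:
    """Generate, check, and regenerate with feedback until the draft passes."""
    feedback: Feedback = []
    history: list[tuple[str, Feedback]] = []
    for _ in range(max_attempts):
        draft = generate(feedback)  # the previous round's problems shape this draft
        feedback = evaluate(draft, max_words, required)
        history.append((draft, feedback))
        if not feedback:
            return draft, history
    return None, history  # give up rather than loop forever


def stub_generator(feedback: Feedback) -> str:
    """Hypothetical model stand-in: returns a revised draft once criticised."""
    if not feedback:
        return "Our new planner helps marketing teams schedule campaigns across every channel"
    return "Plan campaigns across channels with simple, transparent pricing."


draft, history = refine(stub_generator, max_words=10, required=["pricing"])
print(draft)
print(history[0][1])  # ['too long: 11 words > 10', 'missing term: pricing']
```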

Challenges and Constraints

Agentic systems are powerful but constrained by several structural challenges:

- hallucination risk increases when models operate autonomously

- tool misuse or incorrect calls require robust evaluation layers

- long-horizon planning remains difficult

- security constraints limit the freedom of action

- feedback loops can introduce compounding errors

These challenges are not secondary – they shape the research landscape for Stage Seven.
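Several of these constraints are commonly handled by a guard layer around tool execution. The sketch below is one hedged illustration with hypothetical names throughout (`Guard`, `PolicyViolation`): an allowlist stands in for security constraints, a call budget bounds runaway loops, and halting after repeated consecutive failures limits how far errors can compound before a human reviews the run.

```python
from dataclasses import dataclass
from typing import Any, Callable


class PolicyViolation(Exception):
    """Raised when the guard refuses or halts an agent action."""


@dataclass
class Guard:
    allowed_tools: set[str]
    max_calls: int = 10
    max_consecutive_failures: int = 2
    calls: int = 0
    consecutive_failures: int = 0

    def run(self, name: str, fn: Callable[..., Any], /, **kwargs: Any) -> Any:
        """Execute a tool call only if it passes the allowlist and budgets."""
        if name not in self.allowed_tools:
            raise PolicyViolation(f"tool not allowed: {name}")
        if self.calls >= self.max_calls:
            raise PolicyViolation("call budget exhausted")
        self.calls += 1
        try:
            result = fn(**kwargs)
        except Exception as exc:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.max_consecutive_failures:
                # Halt instead of letting the agent build on repeated failures.
                raise PolicyViolation("too many consecutive failures; stopping for review") from exc
            raise
        self.consecutive_failures = 0  # a success resets the failure streak
        return result


guard = Guard(allowed_tools={"search"}, max_calls=3)
print(guard.run("search", lambda query: f"results for {query}", query="pricing"))
# results for pricing
```

Keeping these checks outside the model means they hold even when the model's own judgement about a tool call is wrong.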

Setting the Stage for Stage Seven

Stage Seven explores the introduction of coherence, memory, and long-term structure into agent systems. While Stage Six focuses on goal-driven action, the next stage examines how agents maintain identity, understand tasks across long contexts, and integrate with broader organisational systems.

In essence:

Stage Six gave models the ability to act.

Stage Seven gives them the ability to sustain those actions.

Summary

- Agentic systems build on foundation models by enabling planning, tool use, and multi-step action.

- Agents interpret goals rather than simply respond to prompts.

- Retrieval, memory, and feedback loops expand what models can achieve.

- Real-world workflows shifted as AI moved from being a static component to an active one.

- Stage Seven examines coherence, long-term memory, and sustained reasoning across tasks.