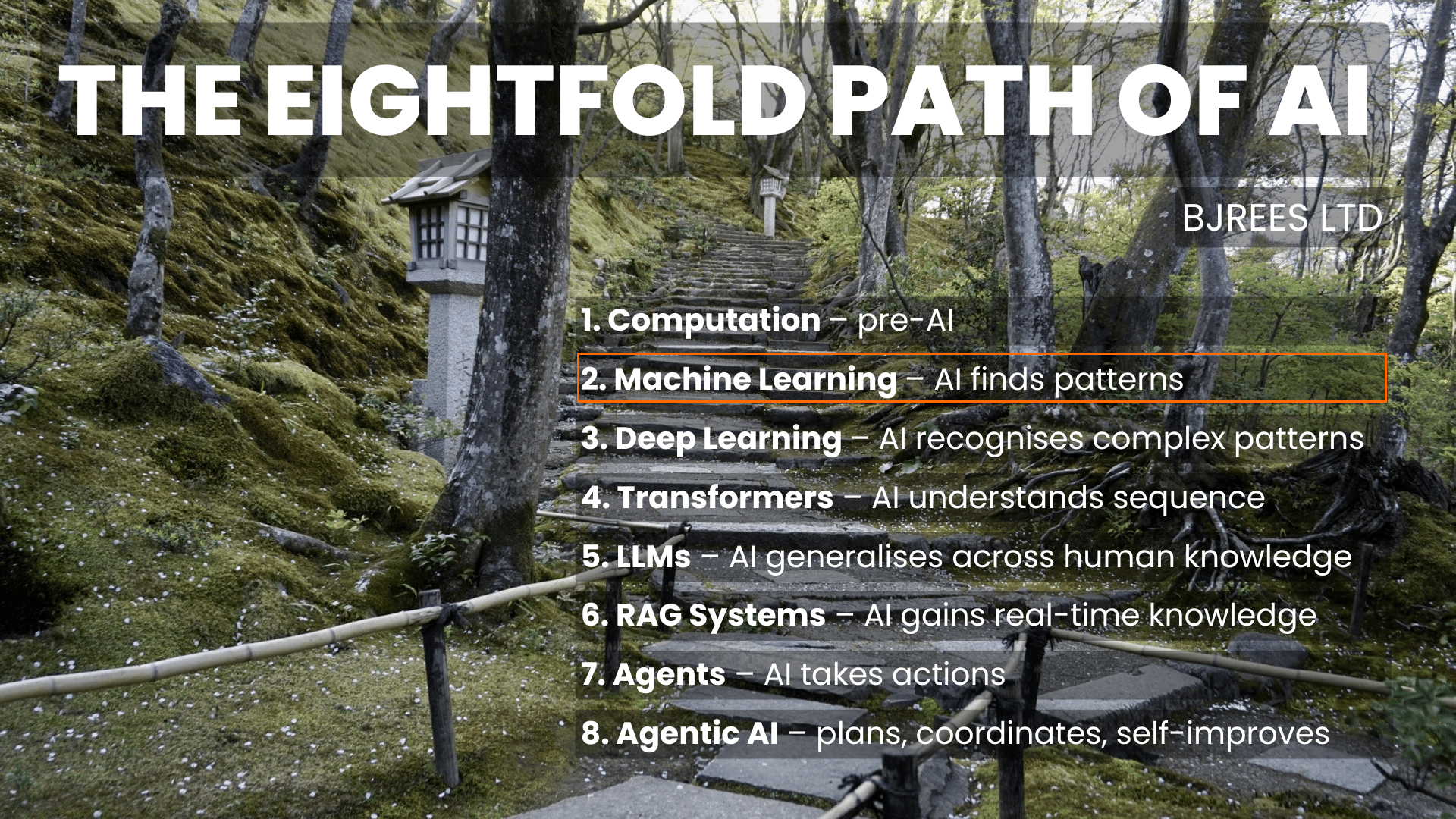

The Eightfold Path of AI

Step Two – Data and Pattern Recognition

The first stage of the Eightfold Path showed how computation set the outer limits of what AI could achieve. Once hardware reached a level where models could run at practical scale, a second ingredient became essential: data.

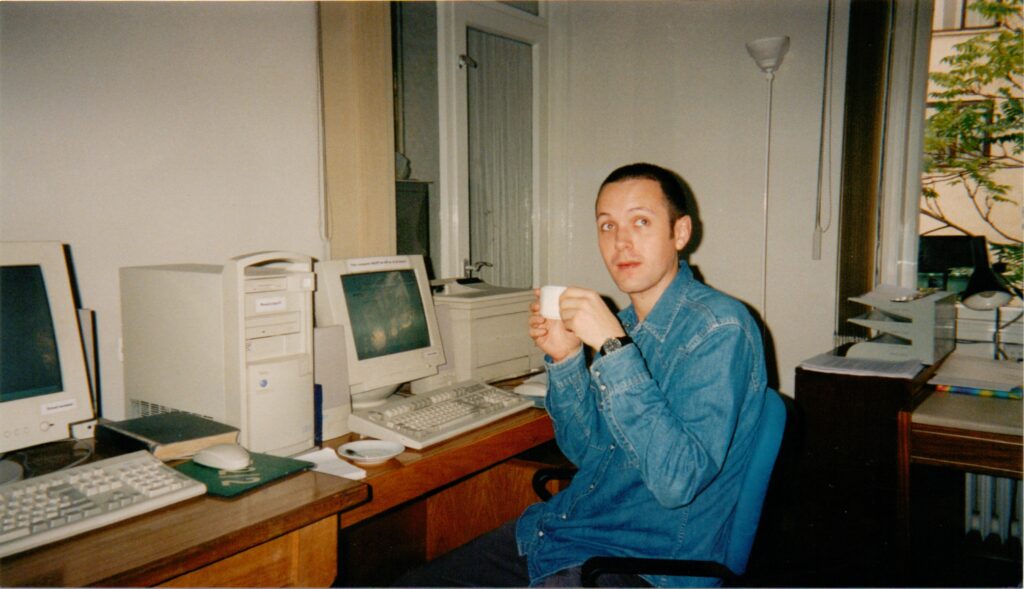

From the late 1990s onwards, the centre of progress shifted from handcrafted rules to statistical learning, where computers were no longer told what patterns to expect but were given examples and asked to find structure for themselves. This was the moment when AI began to behave differently – it moved from executing logic to extracting regularities. It was also the period in which my own academic work took shape, studying Information Processing and Neural Networks during my MSc between 1998 and 1999. The field felt promising but constrained. Models were small, datasets were limited, and learning was narrow. Yet something foundational had begun. Data created a path toward systems that could adapt to evidence rather than depend entirely on human specification.

c. 1998 – studying for my MSc in Information Processing and Neural Networks

From Rules to Learning

Traditional machine learning is best understood as the first real step away from symbolic programming. In symbolic systems, behaviour is derived from explicit instructions: if you see this, do that. Machine learning inverts this relationship. You provide examples and let the model infer the rule. Early work of this kind covered applications and techniques such as:

- spam classifiers

- fraud detection

- churn prediction

- anomaly detection

- decision trees

- regressions

- early neural networks

This shift produced early classifiers that became familiar across industry. Spam detection mapped statistical signals in emails. Fraud detection identified unusual sequences in transaction logs. Churn prediction used behavioural histories to estimate who might leave a service. Regression models linked features to outcomes, while early neural networks tried to capture non-linearity that classical statistics struggled to describe. None of these models resembled modern generative systems, but they introduced a crucial idea. Learning could be driven by data rather than handcrafted logic.
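To make the inversion concrete, here is a minimal sketch of a spam classifier. It is a multinomial naive Bayes model with add-one smoothing, chosen as one classic example of the technique rather than as the method behind any particular product, and the six training messages are invented for illustration. Nothing in the code states which words indicate spam: the word counts gathered from labelled examples take the place of the hand-written rule.

```python
import math
from collections import Counter

# Hypothetical training set of (text, label) pairs, invented for illustration.
TRAIN = [
    ("win cash now", "spam"),
    ("cheap cash offer", "spam"),
    ("win a free prize", "spam"),
    ("meeting agenda attached", "ham"),
    ("lunch tomorrow at noon", "ham"),
    ("project meeting notes", "ham"),
]


def train_naive_bayes(examples):
    """Count words per class; these counts are the 'learned rule'."""
    word_counts = {}          # label -> Counter of word frequencies
    class_counts = Counter()  # label -> number of training messages
    vocab = set()
    for text, label in examples:
        words = text.lower().split()
        class_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(words)
        vocab.update(words)
    return word_counts, class_counts, vocab


def classify(text, word_counts, class_counts, vocab):
    """Return the label with the highest log-posterior score."""
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in class_counts.items():
        score = math.log(n_docs / total_docs)  # log prior P(label)
        n_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Add-one smoothing so an unseen word does not zero out the score.
            count = word_counts[label][word]
            score += math.log((count + 1) / (n_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train_naive_bayes(TRAIN)
print(classify("free cash prize", *model))         # spam
print(classify("notes from the meeting", *model))  # ham
```

Adding more labelled messages changes the counts, and therefore the behaviour, without anyone editing a rule.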

How Statistical Learning Behaved

To understand why this mattered, consider how learning behaves in statistical systems. A model observes examples and searches for the simplest structure that explains them. At this stage, the structure was shallow: linear combinations, simple thresholds, decision trees with a limited number of branches. What appeared intelligent was often pattern frequency.

The model learned what was typical and what deviated from that baseline.
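A baseline of what is typical can be as simple as a mean and a standard deviation. The sketch below assumes hypothetical transaction amounts and an arbitrary cut-off of three standard deviations, and flags any value that deviates too far from the learned baseline. It also illustrates how reactive such a model is: the detector knows only the history it was given.

```python
import statistics


def fit_baseline(values):
    """Learn what is 'typical': the mean and standard deviation of past observations."""
    return statistics.mean(values), statistics.stdev(values)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean.

    The default of 3.0 is an illustrative assumption, not a standard setting.
    """
    mean, std = baseline
    return abs(value - mean) / std > threshold


# Hypothetical daily transaction amounts for one customer (not real data).
history = [42.0, 38.5, 45.2, 40.1, 39.8, 44.0, 41.3, 43.7]
baseline = fit_baseline(history)
print(is_anomalous(41.0, baseline))   # False: close to the learned baseline
print(is_anomalous(480.0, baseline))  # True: far outside it
```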

This meant machine learning worked well for domains where patterns were stable and measurable. It also meant the models were reactive. They could only recognise patterns close to those they had already seen. The generality associated with modern AI was not yet possible because the available representations were thin.

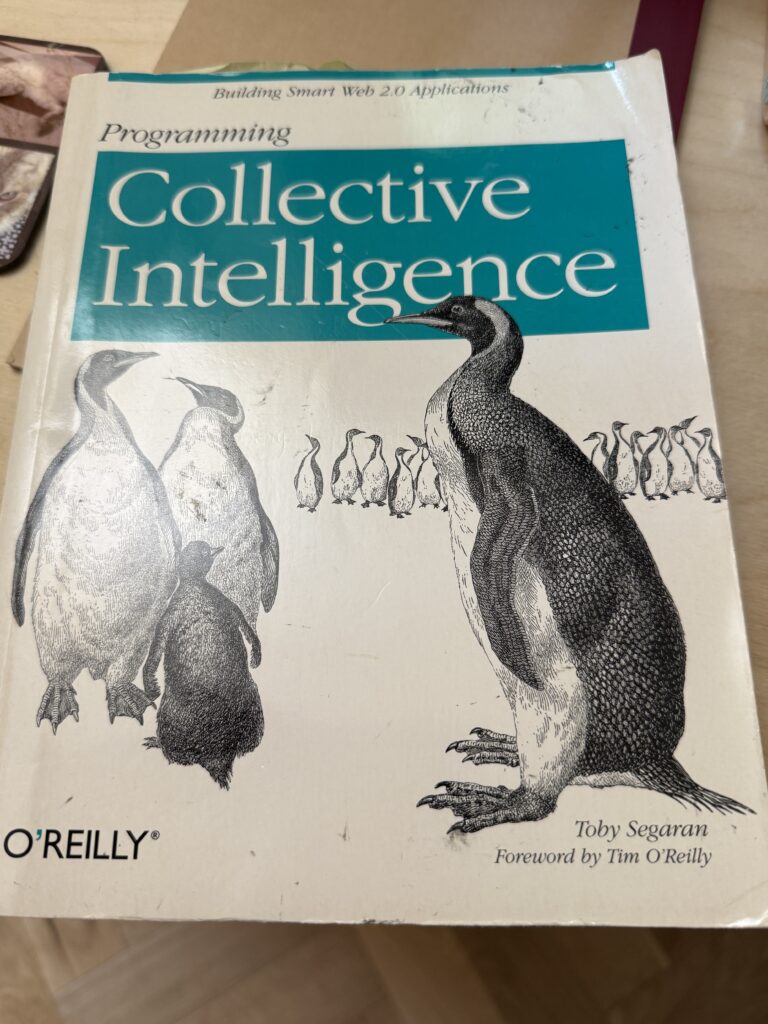

Programming Collective Intelligence (https://www.amazon.co.uk/Programming-Collective-Intelligence-Building-Applications/dp/0596529325) – for me, still one of the greatest books on programming early AI and on really understanding what you're doing

The Constraint of Early Data

The constraint was the data itself. In the 1990s and early 2000s, most digital systems produced small, structured datasets. Enterprise databases, web server logs, and early CRM systems generated observations that were tidy but limited in scope. Machine learning worked within these boundaries.

Algorithms depended on labelled datasets, and labels were expensive to produce. Consequently, the field leaned heavily on supervised learning, where ground truth was known in advance. This made the models highly task-specific. A churn model could not be repurposed for fraud detection. Each domain required its own dataset, its own features, and its own training pipeline. The result was a collection of narrow systems that showed competence without flexibility.
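Supervised learning of this kind can be sketched with a decision stump, a one-level decision tree that learns a single threshold from labelled examples. In the minimal sketch below, the feature (support tickets raised in a month) and the churn labels are hypothetical. The learned threshold only means something for this dataset and this label; a fraud model would need its own features, its own labels, and its own training run.

```python
def fit_stump(xs, ys):
    """Learn a single threshold from labelled examples.

    Tries every observed feature value as a candidate threshold and keeps the
    one that misclassifies the fewest training examples. The stump predicts
    1 (churn) when x >= threshold and 0 otherwise.
    """
    best_threshold, best_errors = None, len(ys) + 1
    for t in sorted(set(xs)):
        errors = sum((x >= t) != bool(y) for x, y in zip(xs, ys))
        if errors < best_errors:
            best_threshold, best_errors = t, errors
    return best_threshold


def predict_churn(threshold, x):
    return int(x >= threshold)


# Hypothetical labelled data: support tickets raised last month, and whether
# the customer later left (1) or stayed (0). Invented for illustration.
tickets = [0, 1, 1, 2, 5, 6, 7, 9]
churned = [0, 0, 0, 0, 1, 1, 1, 1]

threshold = fit_stump(tickets, churned)
print(threshold)                    # 5
print(predict_churn(threshold, 3))  # 0
print(predict_churn(threshold, 8))  # 1
```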

The Shift to Statistical Structure

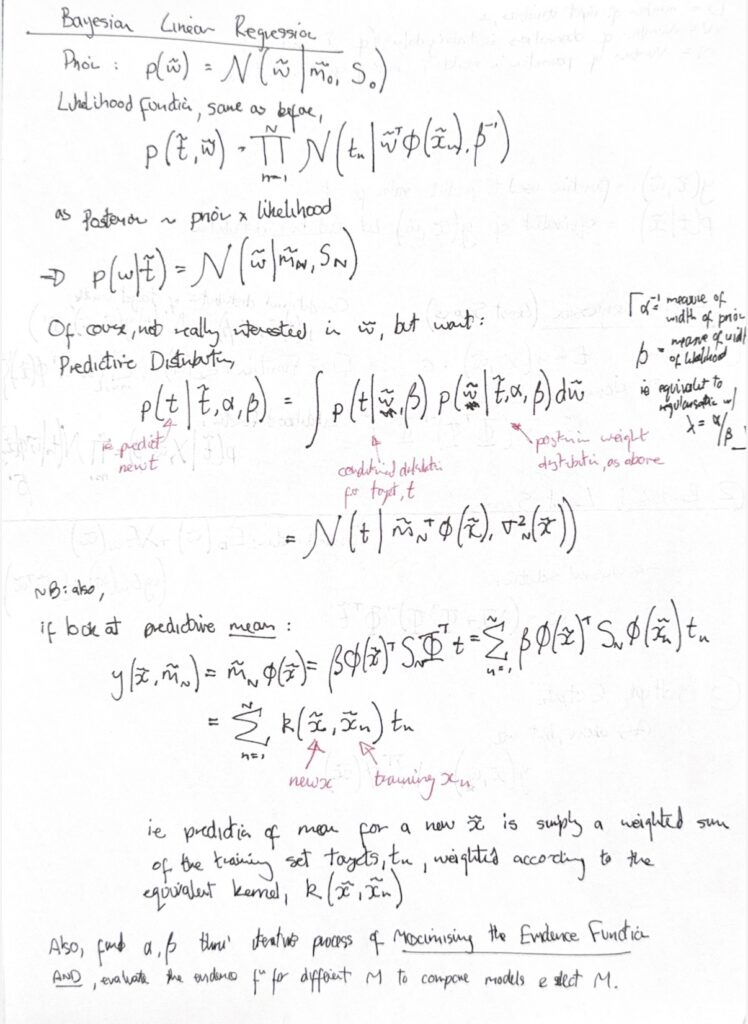

Yet even in this constrained environment, traditional machine learning introduced an important mental model for understanding AI. Learning became a process of discovering statistical structure in data. In Bayesian terms, the model began with weak priors and adjusted its parameters based on observed evidence.
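The idea of weak priors can be shown with the simplest conjugate update: a Beta prior over a rate (here, the share of emails that are spam) combined with yes/no observations. Under a Beta(α, β) prior, observing s successes and f failures gives a Beta(α + s, β + f) posterior. The sketch below assumes hypothetical counts and compares a weak Beta(1, 1) prior with a strong Beta(50, 50) one; it illustrates the principle rather than how any specific historical system was built.

```python
from fractions import Fraction


def update_beta(alpha, beta, successes, failures):
    """Conjugate Beta-Bernoulli update: add observed counts to the prior's pseudo-counts."""
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution, used as the point estimate of the rate."""
    return Fraction(alpha, alpha + beta)


# Hypothetical evidence: 8 of 10 observed emails were spam.
SPAM, NOT_SPAM = 8, 2

weak = (1, 1)      # Beta(1, 1): uniform, i.e. almost no prior belief
strong = (50, 50)  # Beta(50, 50): a confident prior belief that the rate is 1/2

for name, prior in (("weak", weak), ("strong", strong)):
    posterior = update_beta(*prior, successes=SPAM, failures=NOT_SPAM)
    print(name, beta_mean(*prior), "->", beta_mean(*posterior))
# weak 1/2 -> 3/4
# strong 1/2 -> 29/55
```

With the weak prior, ten observations move the estimate from 1/2 to 3/4; with the strong prior, the same evidence shifts it only slightly.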

Although these systems lacked the richness of modern embeddings, the principle was already visible. Evidence could change behaviour. Computation made it feasible, and data made it meaningful. This parallel with the way people revise their beliefs in the light of experience created the conceptual bridge that later stages of the Eightfold Path build on.

Machine Learning in the World of Work

Practically, traditional machine learning became embedded in business operations because it mapped well onto predictable processes. Models scored leads, detected anomalies, priced risk, and estimated demand. They offered statistical forecasts rather than generative insight. Their strength was repeatability. Their limitation was context. A model trained on one dataset could not extrapolate far beyond it, and generalisation was fragile. This explains why early AI felt both promising and underwhelming – it delivered value in constrained settings but did not behave like a general reasoning system.

Preparing the Ground for What Came Next

Historically, this period prepared the ground for everything that followed. The industry needed to understand how to collect, store, and label data at increasing scale. Organisations built data warehouses, logging pipelines, and reporting layers. These were not designed for deep learning, but they created the infrastructure from which deep learning could later emerge. When larger datasets became available in the mid-2000s and early 2010s, especially with the rise of the open web, a new era was unlocked. But at the time, the significance was not obvious. It felt like incremental progress rather than the beginning of a transformation.

My Experience During This Stage

My own experience during this period reflects that ambiguity. Studying neural networks in 1998 and 1999, it was clear that the mathematical ideas were powerful, but the available data and compute restricted what could be demonstrated. Models struggled to converge. Training processes were brittle. Results were often more academic than practical. In hindsight, the field was waiting for both larger datasets and architectural breakthroughs that had not yet arrived. Nonetheless, this period established an important intuition. Learning systems are only as capable as the data they see. Computation enables possibility. Data enables structure.

My old notes from work at King's College London

Why Stage Two Matters

Understanding this stage clarifies why modern AI behaves the way it does. When you scale both compute and data, representations become richer, and models begin to generalise across domains rather than within them. But that leap only makes sense when you recognise its starting point. Stage Two marks the first moment when computers began to learn from experience rather than instruction. It marks the transition from rules to patterns, from logic to statistics, and from expert-designed systems to evidence-driven ones. The next stage introduces the architectures that made this learning deeper, more expressive, and ultimately transformative.

Summary

- Machine learning shifted AI from rule-based systems to pattern recognition.

- Early models were narrow, relying on small datasets and handcrafted features.

- Statistical learning introduced the idea of evidence-driven behaviour.

- Data constraints limited generalisation but prepared the way for deep learning.

- Stage Three introduces richer representations through deep neural networks.